This post is for all of the storage geeks out there who have followed the adventures of Backblaze and our Storage Pods over the years. The rest of you are welcome to come along for the ride.

It has been 10 years since Backblaze introduced our Storage Pod to the world. In September 2009, we announced our hulking, eye-catching, red 4U storage server equipped with 45 hard drives delivering 67 terabytes of storage for just $7,867 — that was about $0.11 a gigabyte. As part of that announcement, we open-sourced the design for what we dubbed Storage Pods, telling you and everyone like you how to build one, and many of you did.

Backblaze Storage Pod version 1 was announced on our blog with little fanfare. We thought it would be interesting to a handful of folks — readers like you. In fact, it wasn’t even called version 1, as no one had ever considered there would be a version 2, much less a version 3, 4, 4.5, 5, or 6. We were wrong. The Backblaze Storage Pod struck a chord with many IT and storage folks who were offended by having to pay a king’s ransom for a high density storage system. “I can build that for a tenth of the price,” you could almost hear them muttering to themselves. Mutter or not, we thought the same thing, and version 1 was born.

The Podfather

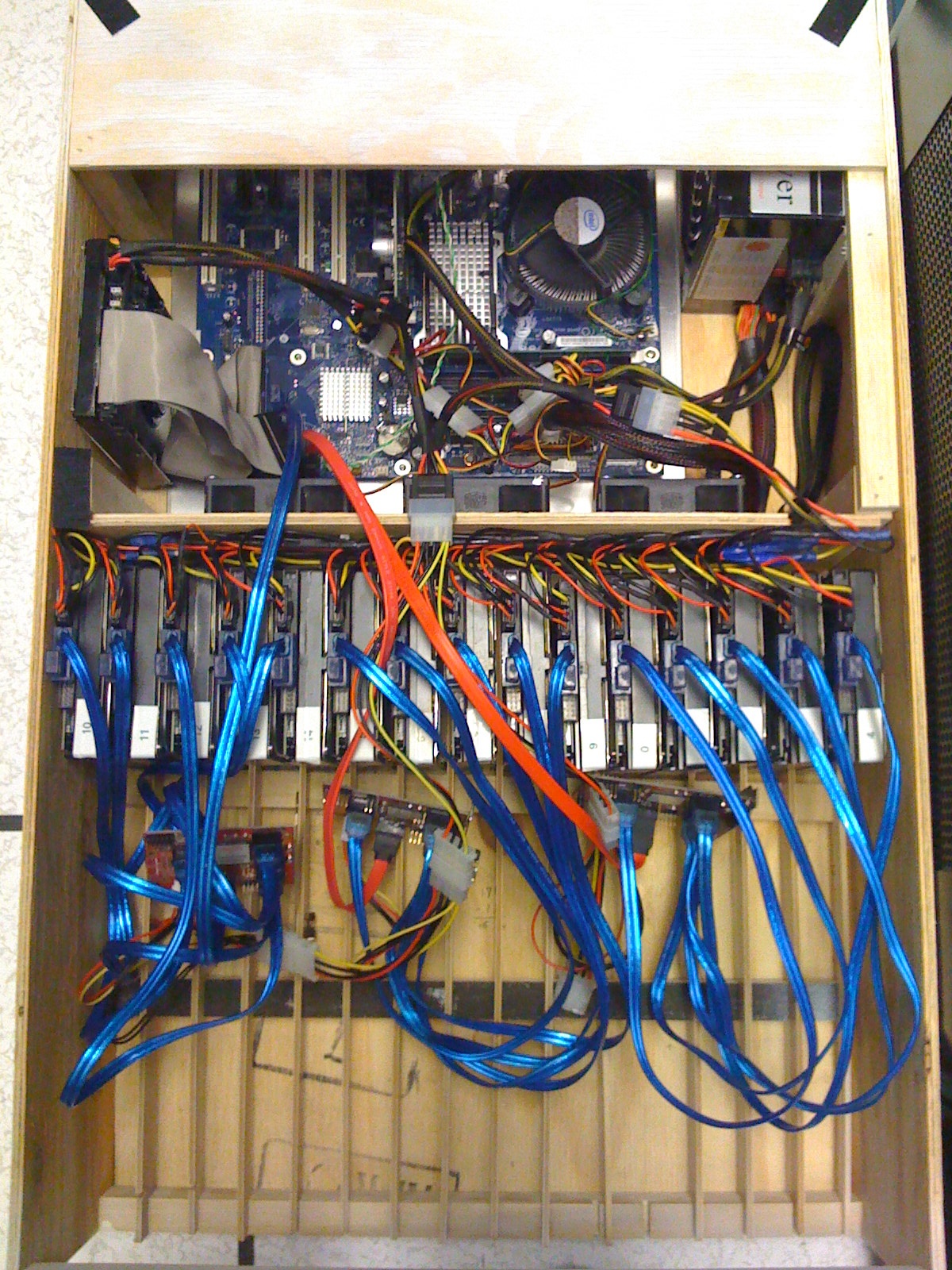

Tim, the “Podfather” as we know him, was the Backblaze lead in creating the first Storage Pod. He had design help from our friends at Protocase, who built the first three generations of Storage Pods for Backblaze and also spun out a company named 45 Drives to sell their own versions of the Storage Pod — that’s open source at its best. Before we decided on the version 1 design, there were a few experiments along the way:

The original Storage Pod was prototyped by building a wooden pod or two. We needed to test the software while the first metal pods were being constructed.

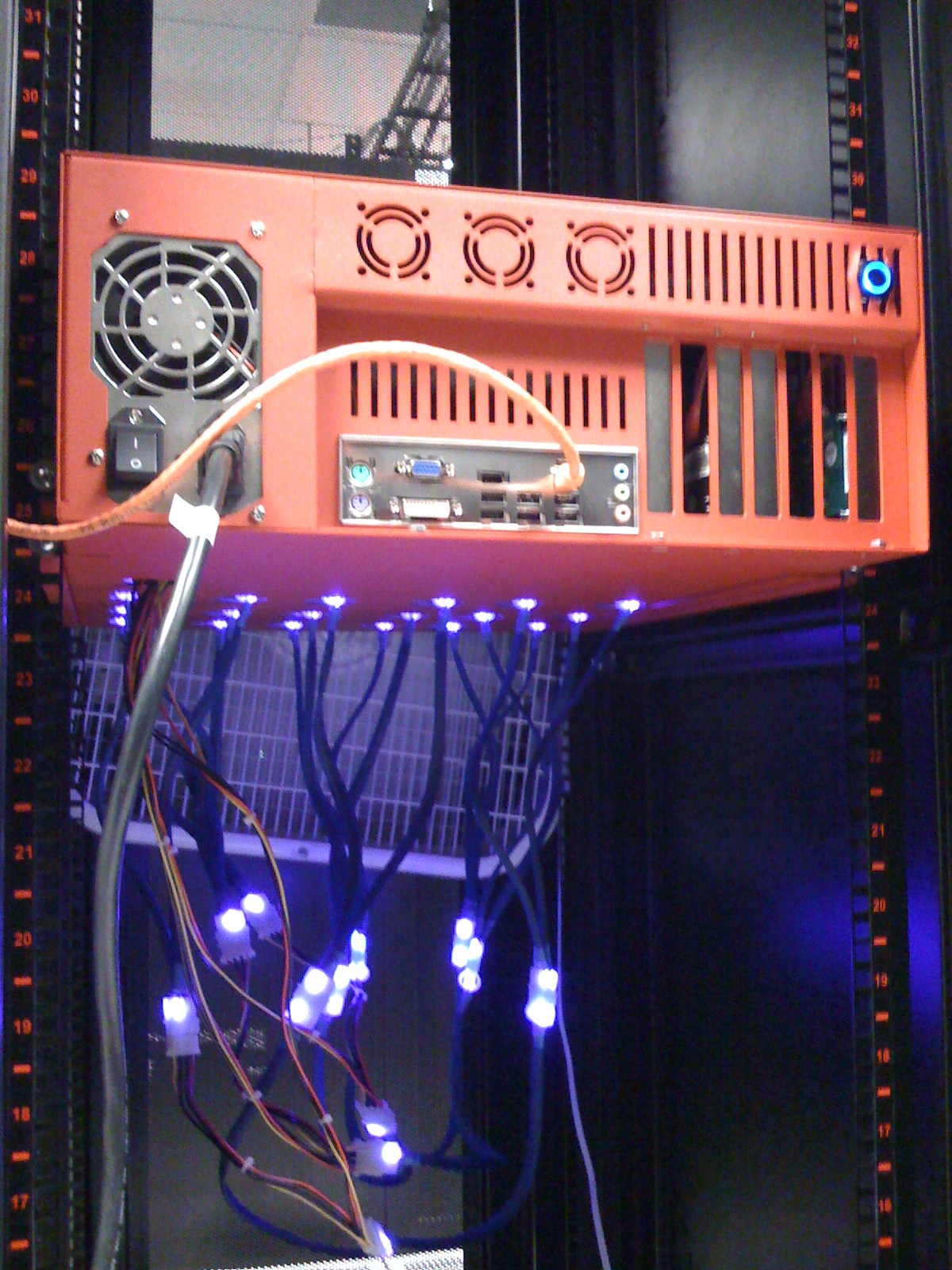

The Octopod was a quick and dirty response to receiving the wrong SATA cables — ones that were too long and glowed. Yes, there are holes drilled in the bottom of the pod.

The original faceplate shown above was used on about 10 pre-1.0 Storage Pods. It was updated to the three circle design just prior to Storage Pod 1.0.

Why are Storage Pods red? When we had the first ones built, the manufacturer had a batch of red paint left over that could be used on our pods, and it was free.

Back in 2007, when we started Backblaze, there weren’t a whole lot of affordable choices for storing large quantities of data. Our goal was to charge $5/month for unlimited data storage for one computer. We decided to build our own storage servers when it became apparent that, if we were to use the other solutions available, we’d have to charge a whole lot more money. Storage Pod 1.0 allowed us to store one petabyte of data for about $81,000. Today we’ve lowered that to about $35,000 with Storage Pod 6.0.

We Must Have Done Something Right

The Backblaze Storage Pod was more than just affordable data storage. Version 1.0 introduced or popularized three fundamental changes to storage design: 1) You could build a system out of commodity parts and it would work, 2) You could mount hard drives vertically and they would still spin, and 3) You could use consumer hard drives in the system. It’s hard to determine which of these three features offended and/or excited more people. It is fair to say that ten years out, things worked out in our favor, as we currently have about 900 petabytes of storage in production on the platform.

Over the last 10 years, people have warmed up to our design, or at least elements of the design. Starting with 45 Drives, multitudes of companies have worked on and introduced various designs for high density storage systems ranging from 45 to 102 drives in a 4U chassis, so today the list of high-density storage systems that use vertically mounted drives is pretty impressive:

| Company | Server | Drive Count |
|---|---|---|
| 45 Drives | Storinator S45 | 45 |
| 45 Drives | Storinator XL60 | 60 |
| Chenbro | RM43160 | 60 |
| Chenbro | RM43699 | 100 |
| Dell | DSS 7000 | 90 |
| HPE | Cloudline CL5200 | 80 |
| HPE | Cloudline CL5800 | 100 |
| NetGear | ReadyNAS 4360X | 60 |
| Newisys | NDS 4450 | 60 |
| Quanta | QuantaGrid D51PL-4U | 102 |
| Quanta | QuantaPlex T21P-4U | 70 |
| Seagate | Exos AP 4U100 | 96 |
| Supermicro | SuperStorage 6049P-E1CR60L | 60 |
| Supermicro | SuperStorage 6049P-E1CR45L | 45 |
| Tyan | Thunder SX FA100-B7118 | 100 |
| Viking Enterprise Solutions | NSS-4602 | 60 |
| Viking Enterprise Solutions | NDS-4900 | 90 |
| Viking Enterprise Solutions | NSS-41000 | 100 |
| Western Digital | Ultrastar Serv60+8 | 60 |
| Wiwynn | SV7000G2 | 72 |

Another driver in the development of some of these systems is the Open Compute Project (OCP). Formed in 2011, OCP gathers and shares ideas and designs for data storage, rack designs, and related technologies. The group is managed by The Open Compute Project Foundation as a 501(c)(6) organization and counts many industry luminaries in the storage business as members.

What Have We Done Lately?

In technology land, 10 years of anything is a long time. What was exciting then is expected now. And the same thing has happened to our beloved Storage Pod. We have introduced updates and upgrades over the years twisting the usual dials: cost down, speed up, capacity up, vibration down, and so on. All good things. But, we can’t fool you, especially if you’ve read this far. You know that Storage Pod 6.0 was introduced in April 2016 and quite frankly it’s been crickets ever since as it relates to Storage Pods. Three plus years of non-innovation. Why?

- If it ain’t broke, don’t fix it. Storage Pod 6.0 is built in the US by Equus Compute Solutions, our contract manufacturer, and it works great. Production costs are well understood, performance is fine, and the new higher density drives perform quite well in the 6.0 chassis.

- Disk migrations kept us busy. From Q2 2016 through Q2 2019 we migrated over 53,000 drives. We replaced 2, 3, and 4 terabyte drives with 8, 10, and 12 terabyte drives, doubling, tripling and sometimes quadrupling the storage density of a storage pod.

- Pod upgrades kept us busy. From Q2 2016 through Q1 2019, we upgraded our older V2, V3, and V4.5 storage pods to V6.0. Then we crushed a few of the older ones with a MegaBot and gave a bunch more away. Today there are no longer any stand-alone storage pods; they are all members of a Backblaze Vault.

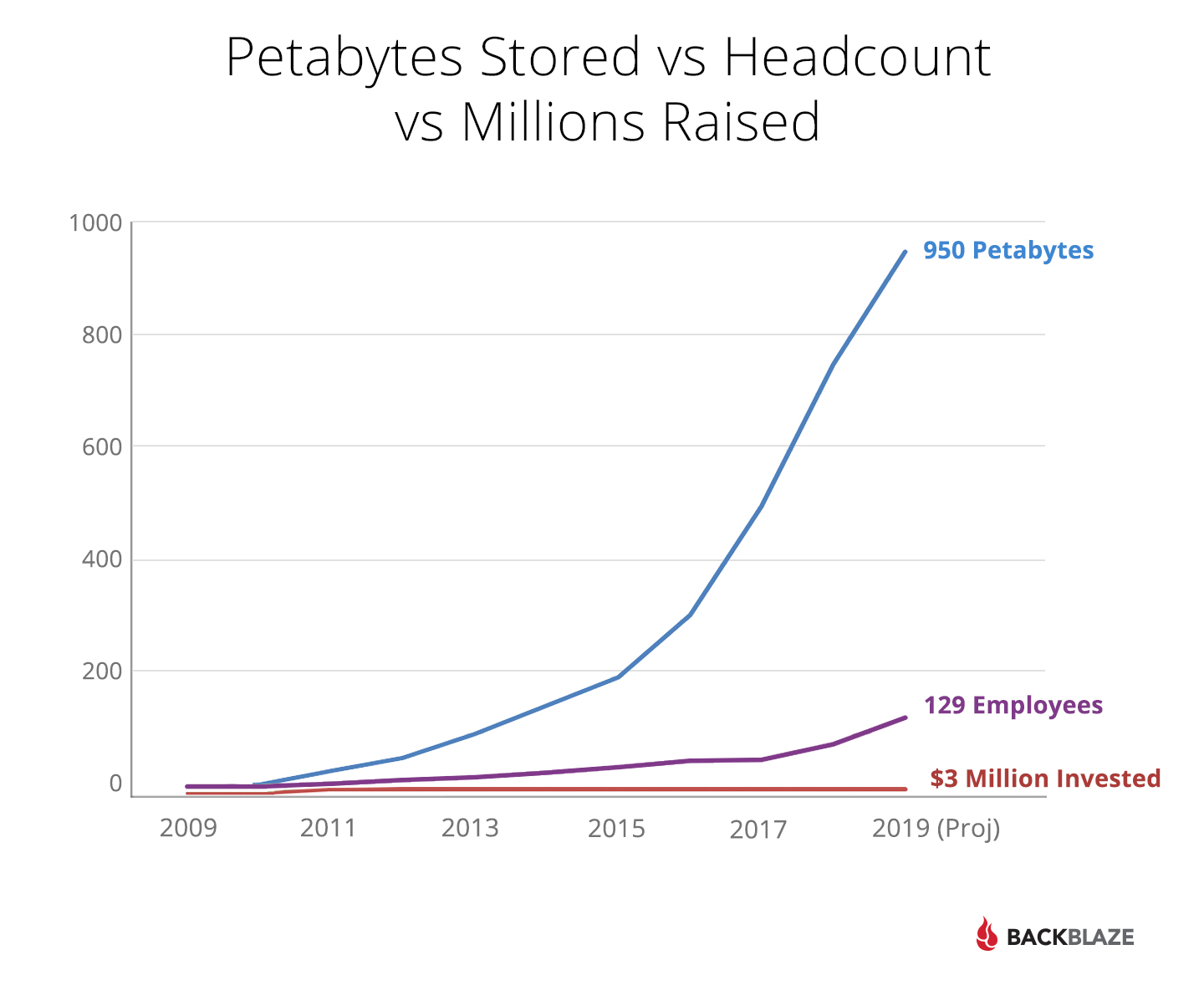

- Lots of data kept us busy. In Q2 2016, we had 250 petabytes of data storage in production. Today, we have 900 petabytes. That’s a lot of data you folks gave us (thank you by the way) and a lot of new systems to deploy. The chart below shows the challenge our data center techs faced.

In other words, our data center folks were really, really busy, and not interested in shiny new things. Now that we’ve hired a bunch more DC techs, let’s talk about what’s next.

Storage Pod Version 7.0 — Almost

Yes, there is a Backblaze Storage Pod 7.0 on the drawing board. Here is a short list of some of the features we are looking at:

- Updating the motherboard

- Upgrading the CPU and considering an AMD CPU

- Updating the power supply units, perhaps moving to one unit

- Upgrading from 10GBASE-T to 10GbE SFP+ optical networking

- Upgrading the SATA cards

- Modifying the tool-less lid design

The timeframe is still being decided, but early 2020 is a good time to ask us about it.

“That’s nice,” you say out loud, but what you are really thinking is, “Is that it? Where’s the Backblaze in all this?” And that’s where you come in.

The Next Generation Backblaze Storage Pod

We are not out of ideas, but one of the things that we realized over the years is that many of you are really clever. From the moment we open-sourced the Storage Pod design back in 2009, we’ve received countless interesting, well thought out, and occasionally odd ideas to improve the design. As we look to the future, we’d be stupid not to ask for your thoughts. Besides, you’ll tell us anyway on Reddit or Hacker News or wherever you’re reading this post, so let’s just cut to the chase.

Build or Buy

The two basic choices are: We design and build our own storage servers or we buy them from someone else. Here are some of the criteria as we think about this:

- Cost: We’d like the cost of a storage server to be about $0.030 – $0.035 per gigabyte of storage (or less of course). That includes the server and the drives inside. For example, using off-the-shelf Seagate 12 TB drives (model: ST12000NM0007) in a 6.0 Storage Pod costs about $0.032-$0.034/gigabyte depending on the price of the drives on a given day.

- International: Now that we have a data center in Amsterdam, we need to be able to ship these servers anywhere.

- Maintenance: Things should be easy to fix or replace — especially the drives.

- Commodity Parts: Wherever possible, the parts should be easy to purchase, ideally from multiple vendors.

- Racks: We’d prefer to keep using 42” deep cabinets, but make a good case for something deeper and we’ll consider it.

- Possible Today: No DNA drives or other wistful technologies. We need to store data today, not in the year 2061.

- Scale: Nothing in the solution should limit the ability to scale the systems. For example, we should be able to upgrade drives to higher densities over the next 5-7 years.

Other than that there are no limitations. Any of the following acronyms, words, and phrases could be part of your proposed solution and we won’t be offended: SAS, JBOD, IOPS, SSD, redundancy, compute node, 2U chassis, 3U chassis, horizontal mounted drives, direct wire, caching layers, appliance, edge storage units, PCIe, fibre channel, SDS, etc.

The solution does not have to be a Backblaze one. As the list from earlier in this post shows, Dell, HPE, and many others make high-density storage platforms we could leverage. Make a good case for any of those units, or any others you like, and we’ll take a look.
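If you want to sanity-check a proposal against the cost criterion above, the arithmetic is simple: add the server cost to the cost of the drives and divide by the raw capacity. Here is a minimal sketch in Python. The function names, the chassis price, the drive price, and the drive count are all hypothetical placeholders for illustration, not our actual numbers; only the 12 TB drive size and the $0.035 upper target come from the list above.

```python
def cost_per_gb(chassis_usd: float, drive_usd: float,
                drive_count: int, drive_tb: float) -> float:
    """Total hardware cost (chassis plus drives) divided by raw capacity in GB."""
    total_usd = chassis_usd + drive_usd * drive_count
    total_gb = drive_count * drive_tb * 1000  # decimal units: 1 TB = 1,000 GB
    return total_usd / total_gb


def meets_target(usd_per_gb: float, ceiling: float = 0.035) -> bool:
    """True when the cost is at or below the upper end of the target range."""
    return usd_per_gb <= ceiling


# Hypothetical inputs for illustration only; plug in your own quotes.
example = cost_per_gb(chassis_usd=3500.0, drive_usd=340.0,
                      drive_count=60, drive_tb=12)
print(f"${example:.4f}/GB, within target: {meets_target(example)}")
```

This counts raw capacity only. It ignores redundancy overhead, power, rack space, and labor, so treat the result as a first-pass filter rather than a full cost model.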

What Will We Do With All Your Input?

We’ve already started by cranking up Backblaze Labs again and have tried a few experiments. Over the coming months we’ll share with you what’s happening as we move this project forward. Maybe we’ll introduce Storage Pod X or perhaps take some of those Storage Pod knockoffs for a spin. Regardless, we’ll keep you posted. Thanks in advance for your ideas and thanks for all your support over the past ten years.
