Last year, our team published a history of the Python GIL. We tapped two contributors, Barry Warsaw, a longtime Python core developer, and Pawel Polewicz, a backend software developer and longtime Python user, to help us write the post.

Today, Pawel is back to revisit the original inspiration for the post: the experiments he did testing different versions of Python with the Backblaze B2 CLI.

If you find the results of Pawel’s speed tests useful, sign up to get more developer content every month in our Backblaze Developer Newsletter. We’ll let Pawel take it from here.

—The Editors

I was setting up and testing a backup solution for one of my clients when I noticed a couple of interesting things I’d like to share today. I realized that by using Python 3.9-nogil, I could increase I/O performance by 10x. I’ll get into the tests themselves, but first let me tell you why I’m telling this story on the Backblaze blog.

I use Backblaze B2 Cloud Storage for disaster recovery for myself and my clients for a few reasons:

- Durability: The numbers bear out that B2 Cloud Storage is reliable.

- Redundancy: If the entire AWS, Google Cloud Platform (GCP), or Microsoft Azure account of one of my clients (usually a startup founder) gets hacked, backups stored in B2 Cloud Storage will stay safe.

- Affordability: The price for B2 Cloud Storage is one-fifth the cost of AWS, GCP, or Azure—better than anywhere else.

- Availability: You can read data immediately without any special “restore from archive” steps. Those might be hard to perform when your hands are shaking after you accidentally deleted something.

Naturally, I always want to make sure my clients can get their backup data out of cloud storage fast should they need to. This brings us to “The Experiment.”

The Experiment: Speed Testing the Backblaze B2 CLI With Different Python Versions

I ran a speed test to see how quickly we could get large files back from Backblaze B2 using the B2 CLI. To my surprise, I found that the download speed depends on the Python version.

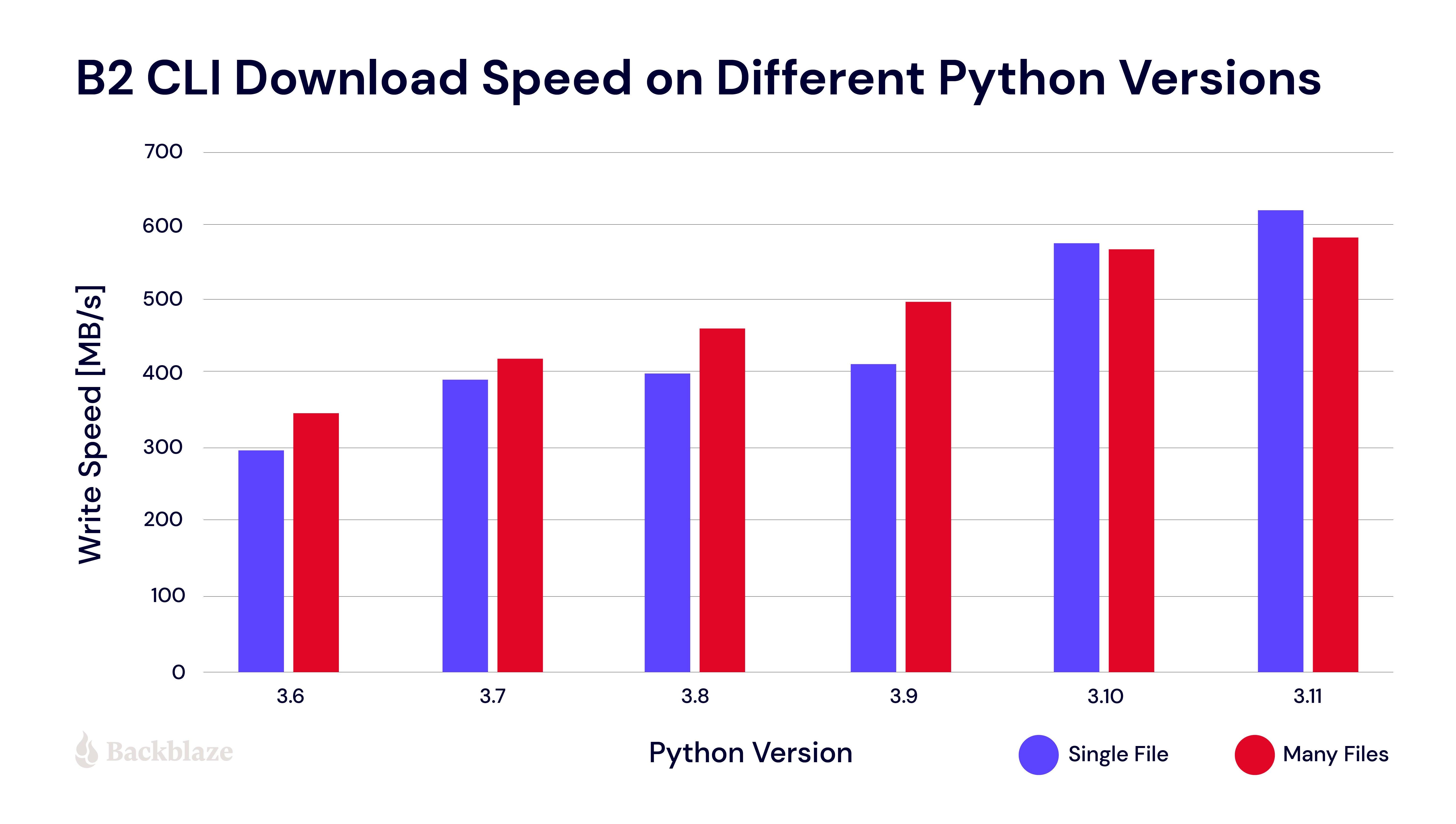

The chart below shows download speeds from different Python versions, 3.6 to 3.11, for both single-file and multi-file downloads.

What’s Going On Under the Hood?

The Backblaze B2 CLI fetches data from the B2 Cloud Storage server using Python’s Requests library. It then saves the data to a local storage device using Python threads, with one writer thread per file. In this type of workload, the newer versions of Python are much faster than the older ones: developers of CPython (the standard implementation of the Python programming language) have been working hard on performance for many years. Of the official releases I tested, CPython 3.10 showed the largest performance improvement. CPython 3.11 is almost twice as fast as 3.6!

Refresher: What’s the GIL Again?

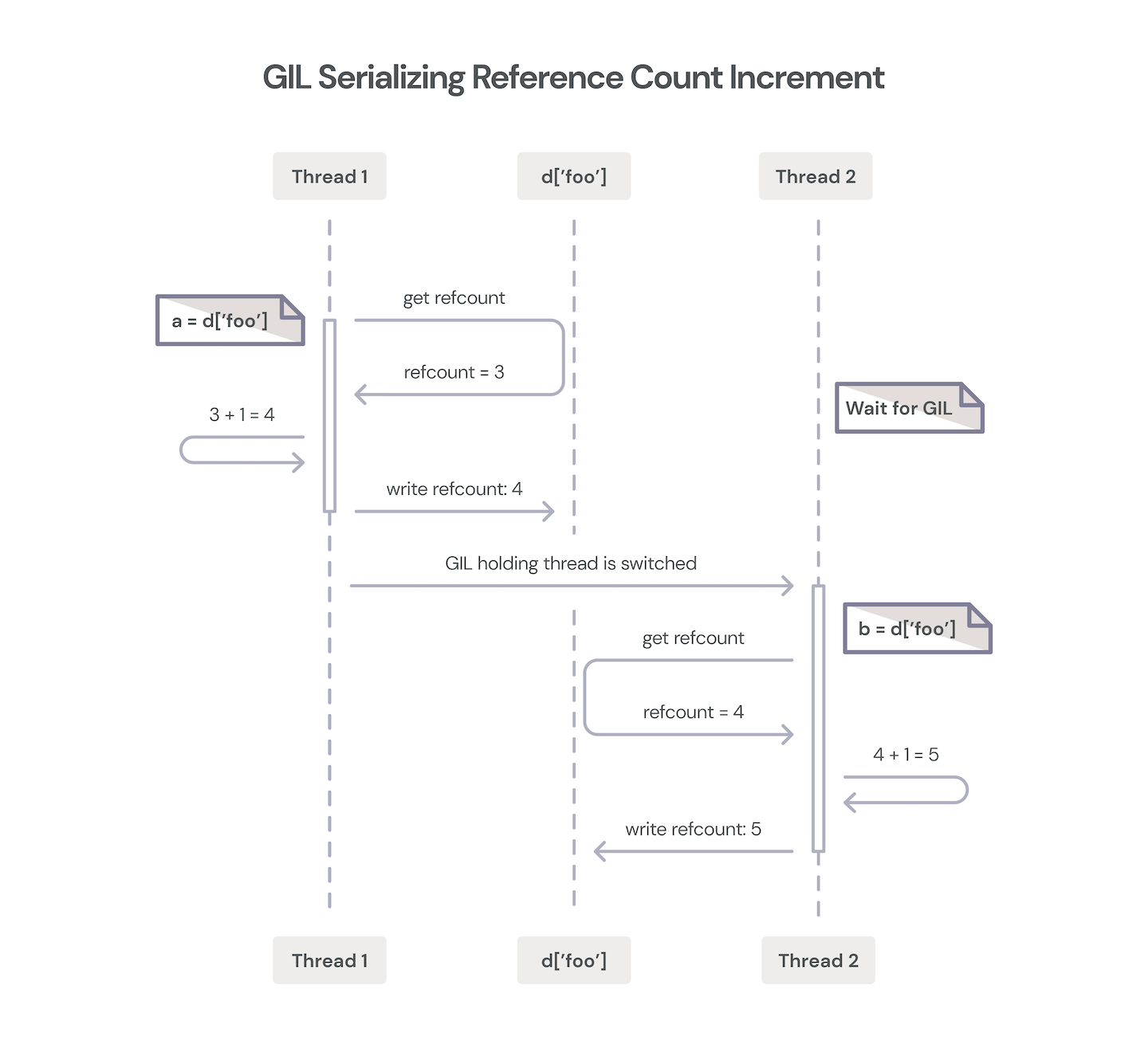

GIL stands for global interpreter lock. You can check out the history of the GIL in the post from last year for a deep dive, but essentially, the GIL is a lock that allows only a single operating system thread to run the central Python bytecode interpreter loop. It serves to serialize operations involving the Python bytecode interpreter—that is, to run tasks in an order—without which developers would need to implement fine grained locks to prevent one thread from overriding the state set by another thread.

Don’t worry—here’s a diagram.

The GIL prevents multiple threads from mutating this state at the same time, which is a good thing as it prevents data corruption, but unfortunately it also prevents any Python code from running in other threads (regardless of whether they would mutate a shared state or not).
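To make that last point concrete, here is a minimal sketch (not part of my original tests) that runs the same pure-Python loop in one thread and then in four. The function names and the amount of work are made up for illustration. On a standard CPython build, I would expect the four-thread run to take roughly four times as long as the single-thread run, because only one thread can execute bytecode at a time; the exact numbers will depend on your machine and interpreter.

```python
import threading
import time


def count_down(n: int) -> int:
    """Pure-Python CPU-bound loop; on standard CPython it holds the GIL while it runs."""
    while n > 0:
        n -= 1
    return n


def timed_run(num_threads: int, work: int) -> float:
    """Run count_down(work) in num_threads threads at once; return elapsed seconds."""
    threads = [
        threading.Thread(target=count_down, args=(work,))
        for _ in range(num_threads)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    work = 1_000_000  # illustrative amount of work per thread
    one = timed_run(1, work)
    four = timed_run(4, work)
    # With the GIL, four threads do four times the work one after another.
    # Without it (and with enough cores), the two timings should be closer.
    print(f"1 thread:  {one:.3f}s")
    print(f"4 threads: {four:.3f}s")
```

None of these threads share any state, yet on a build with the GIL they still cannot run their Python code in parallel.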

How Did “nogil” Perform?

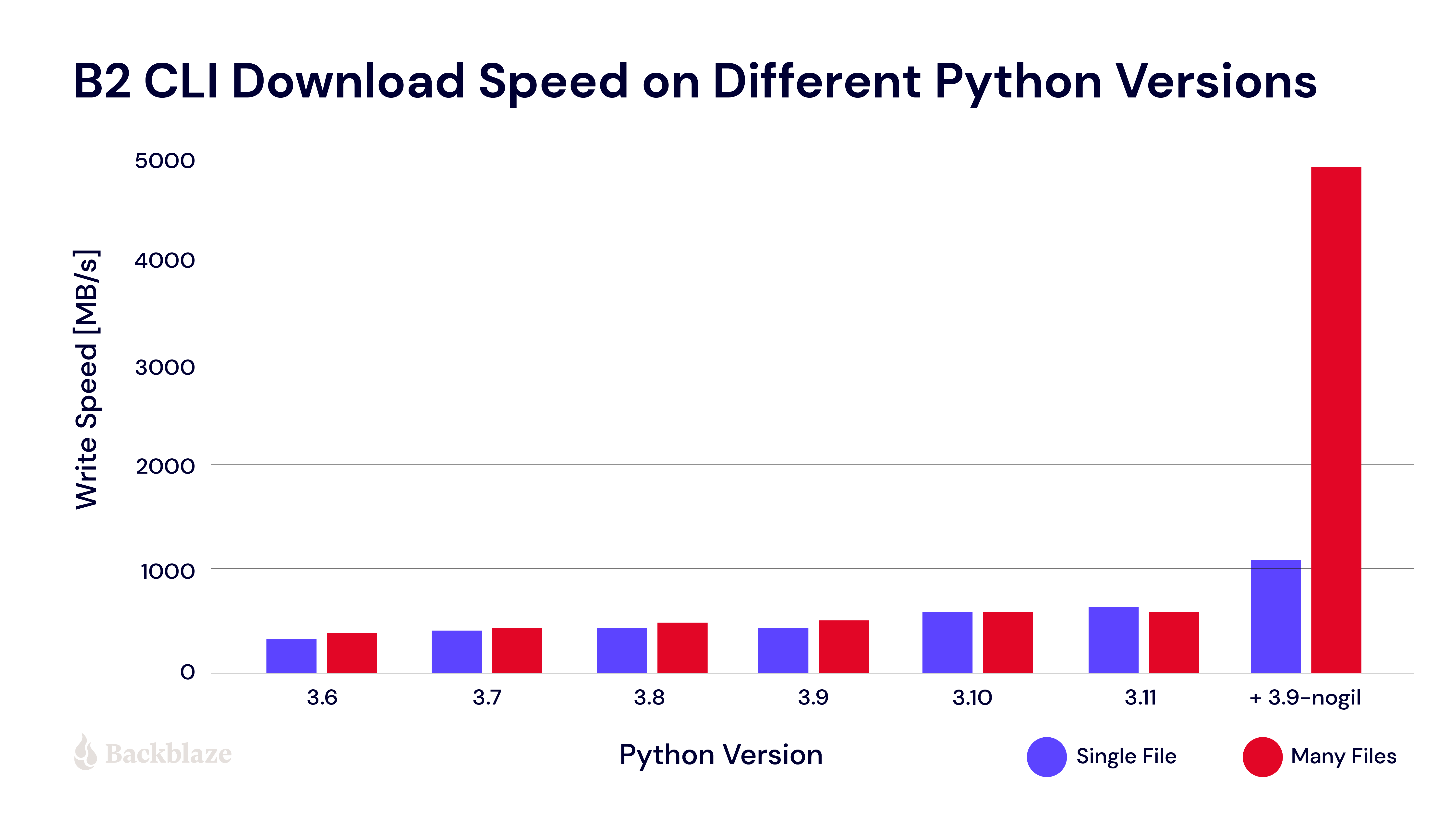

I ran one more test using the “nogil” fork of CPython 3.9. I had heard it improves performance in some cases, so I wanted to try it out to see how much faster my program would be without GIL.

The results of that test were added to the tests run on versions of unmodified CPython and you can see them below:

In this case not being limited by GIL has quite an effect! Most performance benchmarks I’ve seen show how fast the CPython test suite is, but some Python programs move data around. For this type of usage, 3.9-nogil was 2.5 or 10 times faster (for single and multiple files, respectively) on the test than unmodified CPython 3.9.

Why Isn’t nogil Even Faster?

A simple test running parallel writes on the RAID-0 array we set up on an AWS EC2 i3en.24xlarge instance (a monster VM with 96 virtual CPUs, 768 GiB of RAM, and 8 x 7,500 GB of NVMe SSD storage) shows that the bottleneck is not in userspace. The bottleneck is likely a combination of the filesystem, the RAID driver, and the storage device. A single I/O-heavy Python process outperformed one of the fastest virtual servers you can get in 2023, and enabling nogil required just one change: the FROM line of the Dockerfile.

Why Not Use Multiprocessing?

For a single file, POSIX doesn’t guarantee consistency of writes if those are done from different threads (or processes). That’s why the Backblaze B2 CLI uses a single writer thread for each file, while the other threads get data off the network and pass it to the writer using a queue.Queue object. Using a multiprocessing.Queue in the same place degrades performance by approximately 15%.
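The pattern described above can be sketched roughly as follows. This is a simplified illustration, not the actual B2 CLI code: `fetch_part`, `writer`, and `download` are hypothetical names, and the "network" is just an in-memory bytes object so the example runs on its own. Several threads fetch byte ranges and put them on a `queue.Queue`, while one writer thread is the only one that touches the file.

```python
import os
import queue
import tempfile
import threading
from concurrent.futures import ThreadPoolExecutor


def fetch_part(source: bytes, offset: int, size: int, parts: queue.Queue) -> None:
    """Hypothetical stand-in for downloading one byte range over the network."""
    parts.put((offset, source[offset:offset + size]))


def writer(path: str, parts: queue.Queue) -> None:
    """The only thread that writes to the file; stops when it receives None."""
    with open(path, "wb") as f:
        while True:
            item = parts.get()
            if item is None:  # sentinel: all parts have been queued
                break
            offset, chunk = item
            f.seek(offset)  # parts may arrive out of order
            f.write(chunk)


def download(source: bytes, path: str, workers: int = 4, part_size: int = 1024) -> None:
    """Fetch parts in a thread pool and funnel them to a single writer thread."""
    parts: queue.Queue = queue.Queue(maxsize=16)  # bounded, to limit memory use
    writer_thread = threading.Thread(target=writer, args=(path, parts))
    writer_thread.start()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(fetch_part, source, offset, part_size, parts)
            for offset in range(0, len(source), part_size)
        ]
        for future in futures:
            future.result()  # re-raise any error from a fetcher thread
    parts.put(None)  # tell the writer that nothing more is coming
    writer_thread.join()


if __name__ == "__main__":
    data = os.urandom(10_000)
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "restored.bin")
        download(data, target)
        with open(target, "rb") as f:
            print("file matches source:", f.read() == data)
```

Because every thread lives in the same process, the chunks are handed to the writer by reference. With a multiprocessing.Queue, each chunk would have to be serialized and copied between processes.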

The cool thing about threading is that it’s easy to learn. You can take almost any synchronous code and run it in threads in a few minutes. Using something like asyncio or multiprocessing is not so easy. In fact, whenever I tried multiprocessing, the serialization overhead was so high that the entire program slowed down instead of speeding up. As for asyncio, it won’t make Python run on 20 cores, and the cost of rewriting a program based on Requests is prohibitive. Many libraries do not support async anyway and the only way to make them work with async is to wrap them in a thread. Performance of clean async code is known to be higher than threads, but if you mix the async code with threading code, you lose this performance gain.
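As a rough illustration of both points, here is a small sketch. `blocking_fetch` is a made-up stand-in for any blocking library call (for example, an HTTP request made with Requests), and the sleep simulates waiting on the network. The first helper runs the synchronous function in a thread pool; the second shows how async code typically has to wrap the same blocking call in a thread, here with `asyncio.to_thread` (available since Python 3.9).

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_fetch(name: str) -> str:
    """Hypothetical blocking call; the sleep stands in for network I/O."""
    time.sleep(0.1)
    return f"{name}: done"


def fetch_all_threaded(names: list[str]) -> list[str]:
    """Run the unchanged synchronous function in a thread pool."""
    with ThreadPoolExecutor(max_workers=8) as pool:
        return list(pool.map(blocking_fetch, names))


async def fetch_all_async(names: list[str]) -> list[str]:
    """Async code still needs a thread to call a library without async support."""
    tasks = [asyncio.to_thread(blocking_fetch, name) for name in names]
    return list(await asyncio.gather(*tasks))


if __name__ == "__main__":
    files = ["a.bin", "b.bin", "c.bin"]
    print(fetch_all_threaded(files))
    print(asyncio.run(fetch_all_async(files)))
```

In both versions the blocking function itself is untouched, which is what makes threads so quick to adopt for existing code.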

But Threads Can Be Hard Too!

Threads might be easy in comparison to other ways of making your program concurrent, but even that’s a high bar. While some of us may feel confident enough to work around the limitations of Python by using asyncio with uvloop or writing custom extensions in C, not everyone can do that. Case in point: over the last three years I’ve challenged 1,622 applicants to a senior Python backend developer job opening with a very basic task using Python threads. There was more than enough time, but only 30% of the candidates managed to complete it.

What’s Next for nogil?

On January 9, 2023, Sam Gross (the author of the nogil branch) submitted PEP 703, an official proposal to include the nogil mode in CPython. I hope that it will be accepted and that one day nogil will be merged into mainline, so that going beyond single-core performance becomes possible for the many users of Python, and not just for those who are talented and lucky enough to be able to benefit from asyncio, multiprocessing, or custom extensions written in C.
