As of the end of Q2 2022, Backblaze was monitoring 219,444 hard drives and SSDs in our data centers around the world. Of that number, 4,020 are boot drives, with 2,558 being SSDs, and 1,462 being HDDs. Later this quarter, we’ll review our SSD collection. Today, we’ll focus on the 215,424 data drives under management as we review their quarterly and lifetime failure rates as of the end of Q2 2022. Along the way, we’ll share our observations and insights on the data presented and, as always, we look forward to you doing the same in the comments section at the end of the post.

Lifetime Hard Drive Failure Rates

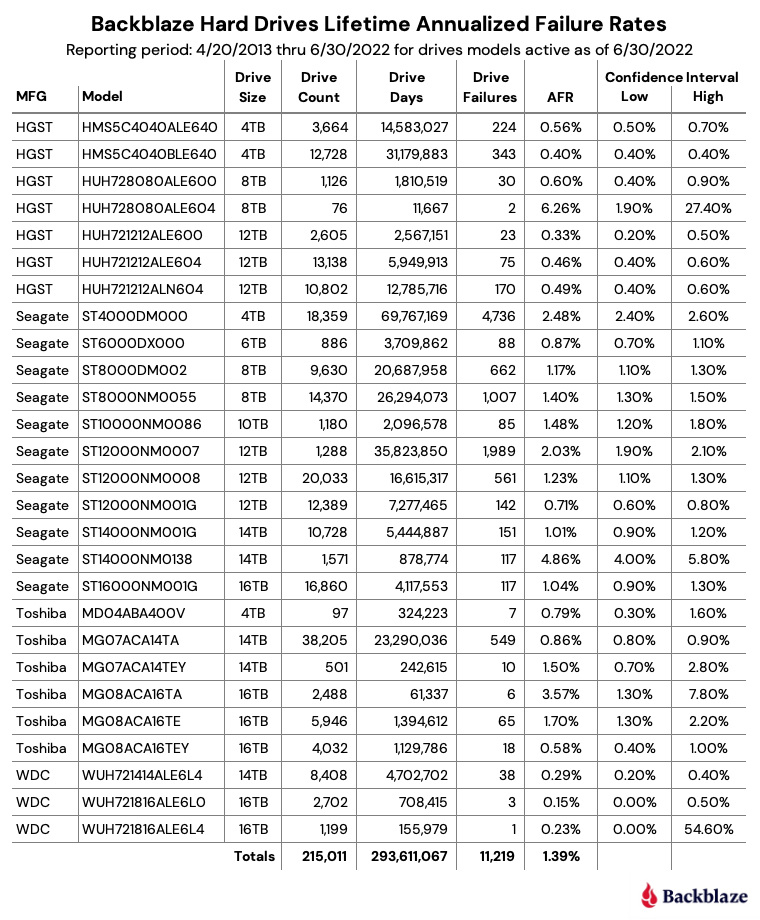

In this report, we’ll change things up a bit and start with the lifetime failure rates. We’ll cover the Q2 data later on in this post. As of June 30, 2022, Backblaze was monitoring 215,424 hard drives used to store data. For our evaluation, we removed 413 drives from consideration because they were either used for testing purposes or belonged to drive models with fewer than 60 drives. This leaves us with 215,011 hard drives grouped into 27 different models to analyze for the lifetime report.

Notes and Observations About the Lifetime Stats

The lifetime annualized failure rate for all the drives listed above is 1.39%. That is the same as last quarter and down from 1.45% one year ago (6/30/2021).

A quick glance down the annualized failure rate (AFR) column identifies the three drives with the highest failure rates:

- The 8TB HGST (model: HUH728080ALE604) at 6.26%.

- The Seagate 14TB (model: ST14000NM0138) at 4.86%.

- The Toshiba 16TB (model: MG08ACA16TA) at 3.57%.

What’s common between these three models? The sample size, in our case drive days, is too small, and in these three cases leads to a wide range between the low and high confidence interval values. The wider the gap, the less confident we are about the AFR in the first place.

In the table above, we list all of the models for completeness, but it does make the chart more complex. We like to make things easy, so let’s remove those drive models that have wide confidence intervals and only include drive models that are generally available. We’ll set our parameters as follows: a 95% confidence interval gap of 0.5% or less, a minimum drive days value of one million to ensure we have a large enough sample size, and drive models that are 8TB or more in size. The simplified chart is below.

To summarize, in our environment, we are 95% confident that the AFR listed for each drive model is between the low and high confidence interval values.
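The selection criteria described above can be sketched as a simple filter. This is only an illustration: the `ModelStats` record, the `passes_filter` helper, and the three rows of numbers are made up for the example and are not taken from our tables. The "generally available" criterion is a judgment call and is not encoded here.

```python
from dataclasses import dataclass


@dataclass
class ModelStats:
    """Hypothetical per-model summary row; field names are assumptions."""
    model: str
    size_tb: int
    drive_days: int
    ci_low: float   # low end of the 95% confidence interval, in percent
    ci_high: float  # high end of the 95% confidence interval, in percent


def passes_filter(m, max_ci_gap=0.5, min_drive_days=1_000_000, min_size_tb=8):
    """Apply the three numeric thresholds named in the text."""
    return (
        m.ci_high - m.ci_low <= max_ci_gap
        and m.drive_days >= min_drive_days
        and m.size_tb >= min_size_tb
    )


# Placeholder rows with invented values, one per way of passing or failing.
rows = [
    ModelStats("EXAMPLE-A", 16, 4_000_000, 0.9, 1.2),   # passes all three
    ModelStats("EXAMPLE-B", 8, 60_000, 3.0, 10.5),      # wide gap, few drive days
    ModelStats("EXAMPLE-C", 4, 50_000_000, 2.4, 2.6),   # smaller than 8TB
]

selected = [m.model for m in rows if passes_filter(m)]
print(selected)
```

Only the first placeholder row survives, since the other two each miss at least one threshold.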

Computing the Annualized Failure Rate

We use the term annualized failure rate, or AFR, throughout our Drive Stats reports. Let’s spend a minute to explain how we calculate the AFR value and why we do it the way we do. The formula for a given cohort of drives is:

AFR = (drive_failures / (drive_days / 365)) * 100

Let’s define the terms used:

- Cohort of drives: The selected set of drives (typically by model) for a given period of time (quarter, annual, lifetime).

- AFR: Annualized failure rate, which is applied to the selected cohort of drives.

- drive_failures: The number of failed drives for the selected cohort of drives.

- drive_days: The number of days all of the drives in the selected cohort are operational during the defined period of time of the cohort (e.g., quarter, annual, lifetime).

For example, for the 16TB Seagate drive in the table above, we have calculated there were 117 drive failures and 4,117,553 drive days over the lifetime of this particular cohort of drives. The AFR is calculated as follows:

AFR = (117 / (4,117,553 / 365)) * 100 = 1.04%
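If you’d like to check the arithmetic yourself, here is a minimal Python sketch. It assumes the AFR is the number of failures per drive-year of service, expressed as a percentage. The function name `annualized_failure_rate` is ours for illustration only.

```python
def annualized_failure_rate(drive_failures: int, drive_days: int) -> float:
    """Return the AFR as a percentage for one cohort of drives."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365  # total service time expressed in years
    return drive_failures / drive_years * 100


# Lifetime figures for the 16TB Seagate cohort quoted in the text.
afr = annualized_failure_rate(drive_failures=117, drive_days=4_117_553)
print(f"{afr:.2f}%")  # prints 1.04%
```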

Why Don’t We Use Drive Count?

Our environment is very dynamic when it comes to drives entering and leaving the system: a 12TB HGST drive fails and is replaced by a 12TB Seagate, a new Backblaze Vault is added along with 1,200 new 14TB Toshiba drives, a Backblaze Vault of 4TB drives is retired, and so on. Using drive count is problematic because it assumes a stable number of drives in the cohort over the observation period. Yes, we will concede that with enough math you can make this work, but rather than going back to college, we keep it simple and use drive days, as it accounts for the potential change in the number of drives during the observation period and apportions each drive’s contribution accordingly.

For completeness, let’s calculate the AFR for the 16TB Seagate drive using a drive count-based formula given there were 16,860 drives and 117 failures.

Drive count AFR = (drive_failures / drive_count) * 100 = (117 / 16,860) * 100 = 0.69%

While the drive count AFR is much lower, the assumption that all 16,860 drives were present the entire observation period (lifetime) is wrong. Over the last quarter, we added 3,601 new drives, and over the last year, we added 12,003 new drives. Yet, all of these were counted as if they were installed on day one. In other words, using drive count AFR in our case would misrepresent drive failure rates in our environment.
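To see the size of the gap, here is a short sketch that puts the two approaches side by side using the 16TB Seagate figures quoted above. The function names are ours for illustration, and the drive count version assumes the simple "failures divided by drives" form.

```python
DAYS_PER_YEAR = 365


def drive_day_afr(failures: int, drive_days: int) -> float:
    """Failures per drive-year of actual service, as a percentage."""
    return failures / (drive_days / DAYS_PER_YEAR) * 100


def drive_count_afr(failures: int, drive_count: int) -> float:
    """Failures per drive, as a percentage; ignores time in service."""
    return failures / drive_count * 100


failures, drive_count, drive_days = 117, 16_860, 4_117_553
avg_days_in_service = drive_days / drive_count

print(f"drive-day AFR:   {drive_day_afr(failures, drive_days):.2f}%")     # 1.04%
print(f"drive-count AFR: {drive_count_afr(failures, drive_count):.2f}%")  # 0.69%
print(f"average days in service per drive: {avg_days_in_service:.0f}")    # 244
```

The two numbers would agree only if the drives averaged exactly 365 days in service. Here the average works out to roughly 244 days, which is why the drive count version comes out lower.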

How We Determine Drive Failure

Today, we classify drive failure into two categories: reactive and proactive. Reactive failures are where the drive has failed and won’t or can’t communicate with our system. Proactive failures are where failure is imminent based on errors the drive is reporting which are confirmed by examining the SMART stats of the drive. In this case, the drive is removed before it completely fails.

Over the last few years, data scientists have used the SMART stats data we’ve collected to see if they can predict drive failure using various statistical methodologies, and more recently, artificial intelligence and machine learning techniques. The ability to accurately predict drive failure, with minimal false positives, will optimize our operational capabilities as we scale our storage platform.

SMART Stats

SMART stands for Self-monitoring, Analysis, and Reporting Technology and is a monitoring system included in hard drives that reports on various attributes of the state of a given drive. Each day, Backblaze records and stores the SMART stats that are reported by the hard drives we have in our data centers. Check out this post to learn more about SMART stats and how we use them.

Q2 2022 Hard Drive Failure Rates

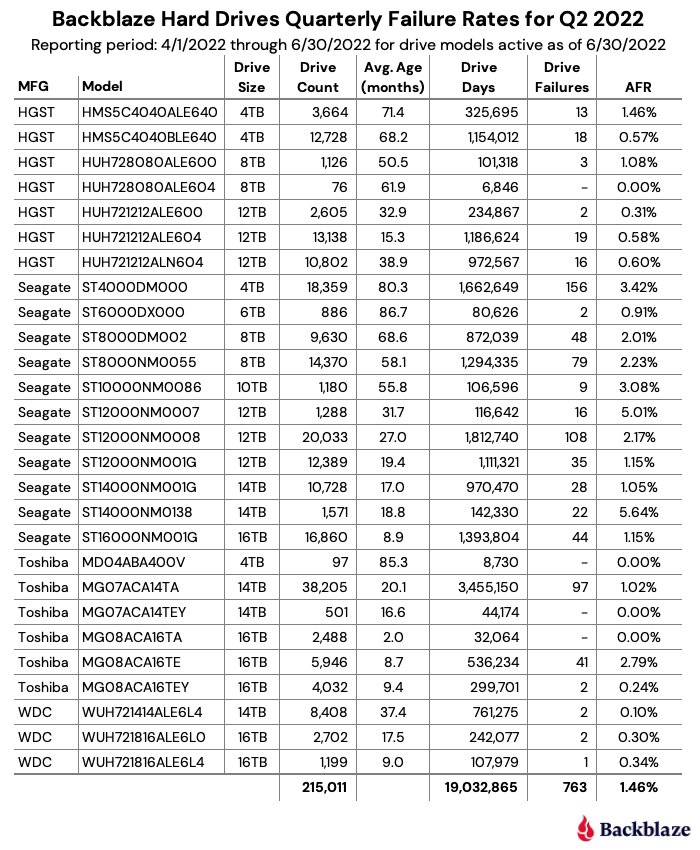

For the Q2 2022 quarterly report, we tracked 215,011 hard drives broken down by drive model into 27 different cohorts using only data from Q2. The table below lists the data for each of these drive models.

Notes and Observations on the Q2 2022 Stats

Breaking news, the OG stumbles: The 6TB Seagate drives (model: ST6000DX000) finally had a failure this quarter—actually, two failures. Given this is the oldest drive model in our fleet with an average age of 86.7 months of service, a failure or two is expected. Still, this was the first failure by this drive model since Q3 of last year. At some point in the future we can expect these drives will be cycled out, but with their lifetime AFR at just 0.87%, they are not first in line.

Another zero for the next OG: The next oldest drive cohort in our collection, the 4TB Toshiba drives (model: MD04ABA400V) at 85.3 months, had zero failures for Q2. The last failure was recorded a year ago in Q2 2021. Their lifetime AFR is just 0.79%, although their lifetime confidence interval gap is 1.3%, which as we’ve seen means we are lacking enough data to be truly confident of the AFR number. Still, at one failure per year, they could last another 97 years—probably not.

More zeroes for Q2: Three other drives had zero failures this quarter: the 8TB HGST (model: HUH728080ALE604), the 14TB Toshiba (model: MG07ACA14TEY), and the 16TB Toshiba (model: MG08ACA16TA). As with the 4TB Toshiba noted above, these drives have very wide confidence interval gaps driven by a limited number of data points. For example, the 16TB Toshiba had the most drive days (32,064) of any of these drive models. We would need to have at least 500,000 drive days in a quarter to get to a 95% confidence interval. Still, it is entirely possible that any or all of these drives will continue to post great numbers over the coming quarters; we’re just not 95% confident yet.
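One way to see why zero failures over a small number of drive days is not conclusive is to ask how high the true AFR could be and still plausibly produce zero failures. The sketch below assumes failures arrive as a Poisson process with a constant rate and computes a one-sided 95% upper bound. That model is our assumption for illustration and is not necessarily how the confidence intervals in the tables were produced.

```python
import math


def zero_failure_afr_upper_bound(drive_days: int, confidence: float = 0.95) -> float:
    """One-sided upper bound on AFR (%) for a cohort with zero observed failures.

    Assumes a constant-rate Poisson failure process (illustrative only).
    """
    drive_years = drive_days / 365
    # With zero failures, P(0 failures) = exp(-rate * drive_years).
    # Solve exp(-rate * drive_years) = 1 - confidence for the rate.
    rate_per_year = -math.log(1 - confidence) / drive_years
    return rate_per_year * 100


for days in (32_064, 500_000):
    bound = zero_failure_afr_upper_bound(days)
    print(f"{days:>7,} drive days -> AFR could be as high as {bound:.2f}%")
```

Under these assumptions, zero failures in 32,064 drive days is still consistent with an AFR above 3%, while the same result over 500,000 drive days would pin the AFR well below 1%.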

Running on fumes: The 4TB Seagate drives (model: ST4000DM000) are starting to show their age, 80.3 months on average. Their quarterly failure rate has increased each of the last four quarters to 3.42% this quarter. We have deployed our drive cloning program for these drives as part of our data durability program, and over the next several months, these drives will be cycled out. They have served us well, but it appears they are tired after nearly seven years of constant spinning.

The AFR increases, again: In Q2, the AFR increased to 1.46% for all drive models combined. This is up from 1.22% in Q1 2022 and up from 1.01% a year ago in Q2 2021. The aging 4TB Seagate drives are part of the increase, but the failure rates of both the Toshiba and HGST drives have increased as well over the last year. This appears to be related to the aging of the entire drive fleet and we would expect this number to go down as older drives are retired over the next year.

Four Thousand Storage Servers

In the opening paragraph, we noted there were 4,020 boot drives. What may not be obvious is that this equates to 4,020 storage servers. These are 4U servers with 45 or 60 drives in each with drives ranging in size from 4TB to 16TB. The smallest is 180TB of raw storage space (45 * 4TB drives) and the largest is 960TB of raw storage (60 * 16TB drives). These servers are a mix of Backblaze Storage Pods and third-party storage servers. It’s been a while since our last Storage Pod update, so look for something in late Q3 or early Q4.

Drive Stats at DEFCON

If you will be at DEFCON 30 in Las Vegas, I will be speaking live at the Data Duplication Village (DDV) at 1 p.m. on Friday, August 12th. The all-volunteer DDV is located in the lower level of the executive conference center of the Flamingo hotel. We’ll be talking about Drive Stats, SSDs, drive life expectancy, SMART stats, and more. I hope to see you there.

Never Miss the Drive Stats Report

Sign up for the Drive Stats Insiders newsletter and be the first to get Drive Stats data every quarter as well as the new Drive Stats SSD edition.

The Hard Drive Stats Data

The complete data set used to create the information used in this review is available on our Hard Drive Test Data page. You can download and use this data for free for your own purpose. All we ask are three things: 1) you cite Backblaze as the source if you use the data, 2) you accept that you are solely responsible for how you use the data, and 3) you do not sell this data to anyone; it is free.

If you want the tables and charts used in this report, you can download the .zip file from Backblaze B2 Cloud Storage which contains the .jpg and/or .xlsx files as applicable.

Good luck and let us know if you find anything interesting.
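If you want to compute drive days and failures from the data yourself, a minimal sketch follows. It assumes each daily snapshot is a CSV with one row per operational drive and with `model` and `failure` columns, where `failure` is 1 on the day a drive fails and 0 otherwise. Check the column names against the files you download. The helper name `tally_drive_days` and the sample rows are made up for the example.

```python
import csv
import io
from collections import defaultdict


def tally_drive_days(csv_file, totals=None):
    """Add one daily snapshot to per-model [drive_days, drive_failures] totals."""
    if totals is None:
        totals = defaultdict(lambda: [0, 0])
    for row in csv.DictReader(csv_file):
        counts = totals[row["model"]]
        counts[0] += 1                    # one row = one drive operational for one day
        counts[1] += int(row["failure"])  # assumed: 1 on the day of failure, else 0
    return totals


# Two tiny invented snapshots standing in for real daily files.
day_1 = io.StringIO(
    "date,serial_number,model,failure\n"
    "2022-06-29,SN1,EXAMPLE-A,0\n"
    "2022-06-29,SN2,EXAMPLE-A,0\n"
    "2022-06-29,SN3,EXAMPLE-B,0\n"
)
day_2 = io.StringIO(
    "date,serial_number,model,failure\n"
    "2022-06-30,SN1,EXAMPLE-A,1\n"
    "2022-06-30,SN2,EXAMPLE-A,0\n"
    "2022-06-30,SN3,EXAMPLE-B,0\n"
)

totals = tally_drive_days(day_1)
totals = tally_drive_days(day_2, totals)
for model, (drive_days, drive_failures) in sorted(totals.items()):
    print(model, drive_days, drive_failures)
```

With real files, you would open each daily CSV in turn and pass it to the same function, then plug the resulting drive days and failures into the AFR calculation.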

Want More Drive Stats Insights?

Check out our 2021 Year-end Drive Stats Report.

Interested in the SSD Data?

Read our first SSD-based Drive Stats Report.
