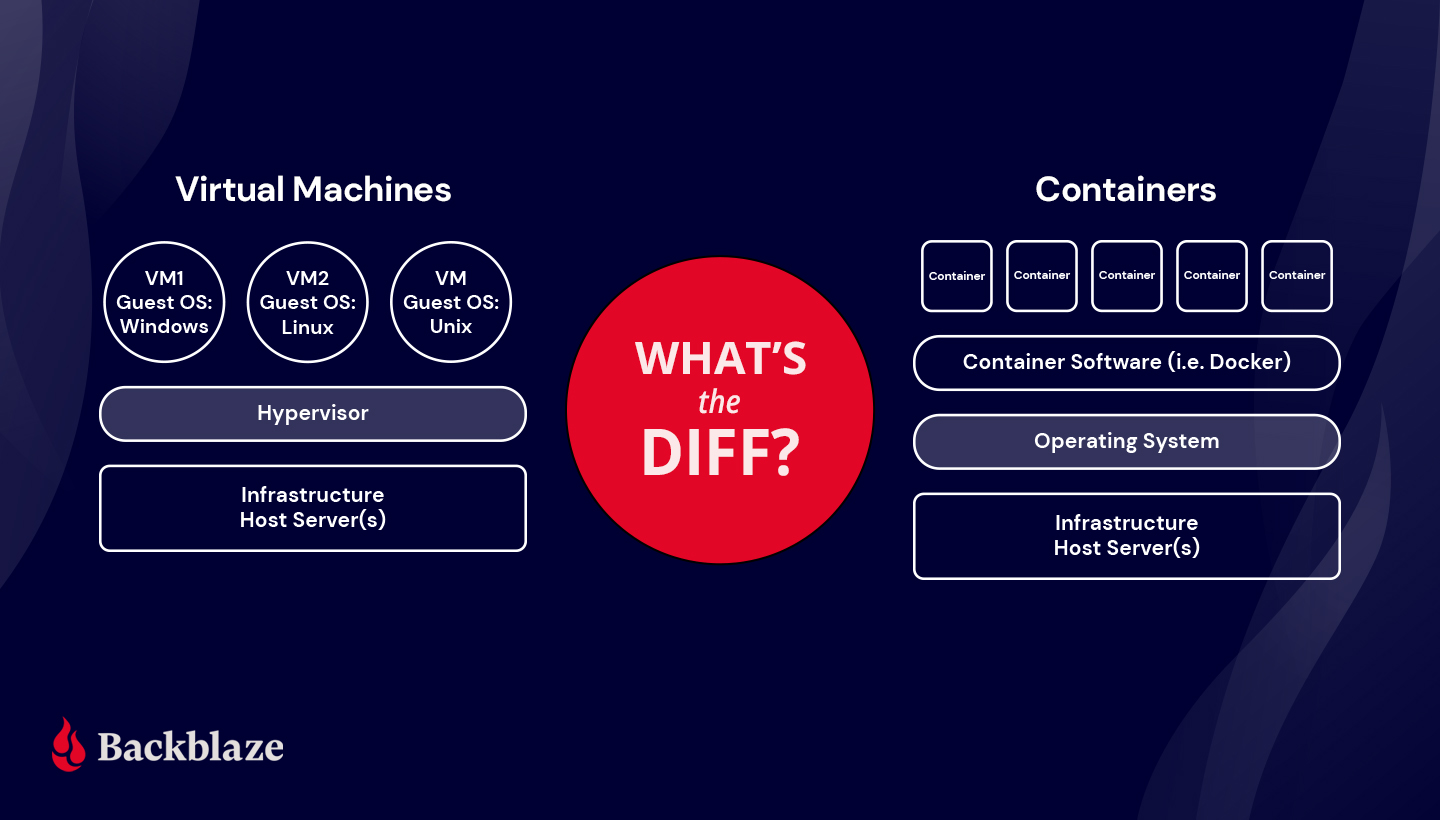

Both virtual machines (VMs) and containers help you optimize computer hardware and software resources via virtualization.

Containers have been around for a while, but their broad adoption over the past few years has fundamentally changed IT practices. On the other hand, VMs have enjoyed enduring popularity, maintaining their presence across data centers of various scales.

As you think about how to run services and build applications in the cloud, these virtualization techniques can help you do so faster and more efficiently. Today, we’re digging into how they work, how they compare to each other, and how to use them to drive your organization’s digital transformation.

First, the Basics: Some Definitions

What Is Virtualization?

Virtualization is the process of creating a virtual version or representation of computing resources like servers, storage devices, operating systems (OS), or networks that are abstracted from the physical computing hardware. This abstraction enables greater flexibility, scalability, and agility in managing and deploying computing resources. You can create multiple virtual computers from the hardware and software components of a single machine. You can think of it as essentially a computer-generated computer.

What Is a Hypervisor?

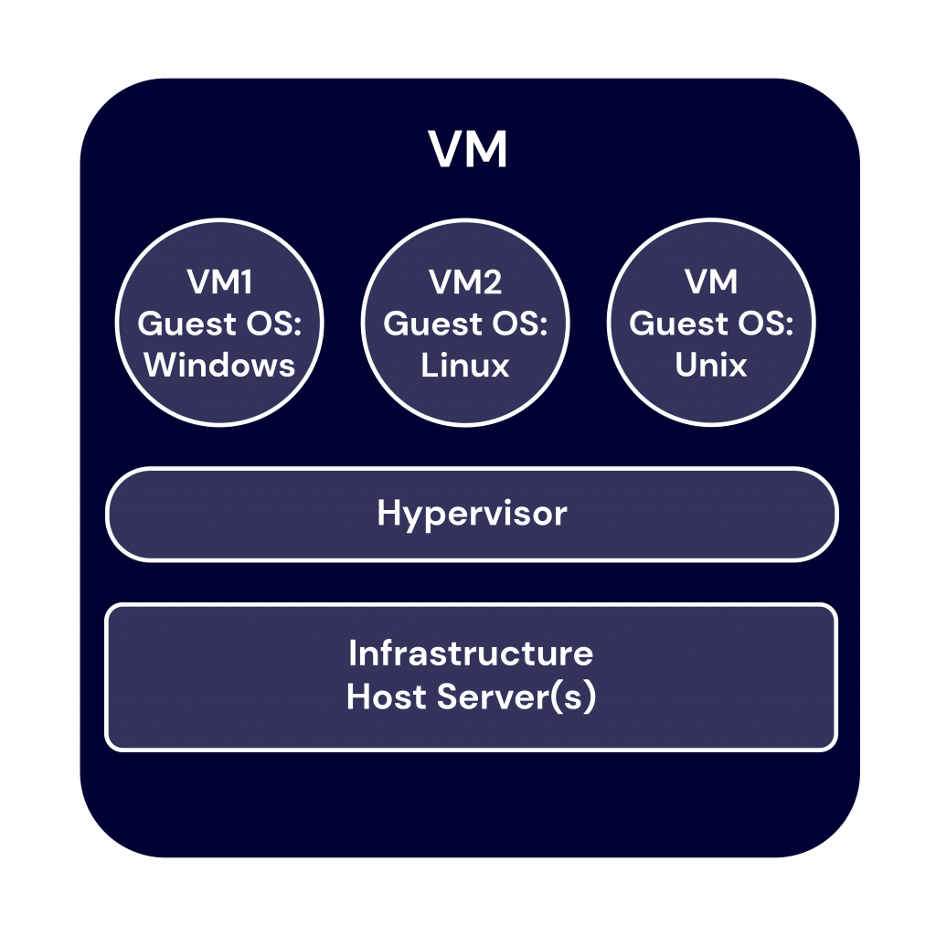

The software that enables the creation and management of virtual computing environments is called a hypervisor. It’s a lightweight software or firmware layer that sits between the physical hardware and the virtualized environments and allows multiple operating systems to run concurrently on a single physical machine. The hypervisor abstracts and partitions the underlying hardware resources, such as central processing units (CPUs), memory, storage, and networking, and allocates them to the virtual environments. You can think of the hypervisor as the middleman that pulls resources from the raw materials of your infrastructure and directs them to the various computing instances.

There are two types of hypervisors:

- Type 1, or bare-metal, hypervisors run directly on the hardware.

- Type 2, or hosted, hypervisors operate within a host operating system.

Hypervisors are fundamental to virtualization technology, enabling efficient utilization and management of computing resources.
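To make the "middleman" idea a bit more concrete, here is a minimal sketch of a hypervisor handing out slices of a host's CPU and memory to VMs. The `ToyHypervisor` class and its numbers are hypothetical and purely illustrative. Real hypervisors are far more sophisticated and can, for example, overcommit resources.

```python
from dataclasses import dataclass, field


@dataclass
class ToyHypervisor:
    """Hypothetical model of a host whose CPUs and RAM are partitioned among VMs."""
    total_cpus: int
    total_ram_gb: int
    vms: dict = field(default_factory=dict)  # VM name -> (cpus, ram_gb)

    def free(self):
        """Return (free CPUs, free RAM in GB) not yet allocated to any VM."""
        used_cpus = sum(cpus for cpus, _ in self.vms.values())
        used_ram = sum(ram for _, ram in self.vms.values())
        return self.total_cpus - used_cpus, self.total_ram_gb - used_ram

    def create_vm(self, name, cpus, ram_gb):
        """Allocate resources to a new VM, refusing if the host cannot cover them."""
        free_cpus, free_ram = self.free()
        if cpus > free_cpus or ram_gb > free_ram:
            raise RuntimeError(f"not enough free resources for {name}")
        self.vms[name] = (cpus, ram_gb)


# Illustrative host: 16 CPUs and 64 GB of RAM (made-up figures).
host = ToyHypervisor(total_cpus=16, total_ram_gb=64)
host.create_vm("web", cpus=4, ram_gb=8)
host.create_vm("db", cpus=8, ram_gb=32)
print(host.free())  # (4, 24)
```

Each VM only ever sees the slice it was given, which is the partitioning role described above.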

VMs and Containers

What Are VMs?

The computer-generated computers that virtualization makes possible are known as virtual machines (VMs)—separate virtual computers running on one set of hardware or a pool of hardware. Each virtual machine acts as an isolated and self-contained environment, complete with its own virtual hardware components, including CPU, memory, storage, and network interfaces. The hypervisor allocates and manages resources, ensuring each VM has its fair share and preventing interference between them.

Each VM requires its own OS. Thus each VM can host a different OS, enabling diverse software environments and applications to exist without conflict on the same machine. VMs provide a level of isolation, ensuring that failures or issues within one VM do not impact others on the same hardware. They also enable efficient testing and development environments, as developers can create VM snapshots to capture specific system states for experimentation or rollbacks. VMs also offer the ability to easily migrate or clone instances, making it convenient to scale resources or create backups.

Since the advent of affordable virtualization technology and cloud computing services, IT departments large and small have embraced VMs as a way to lower costs and increase efficiencies.

VMs, however, can take up a lot of system resources. Each VM runs not just a full copy of an OS, but a virtual copy of all the hardware that the operating system needs to run. This quickly adds up to a lot of RAM and CPU cycles. It’s why VMs are sometimes associated with the term “monolithic”: they’re single, all-in-one units commonly used to run applications built as single, large files. (The nickname will make a bit more sense after you learn more about containers below.) VMs are still economical compared to running separate physical computers, but for some use cases, particularly applications, they can be overkill, which led to the development of containers.

Benefits of VMs

- All OS resources available to apps.

- Well-established functionality.

- Robust management tools.

- Well-known security tools and controls.

- The ability to run different OSes on one physical machine.

- Cost savings compared to running separate, physical machines.


What Are Containers?

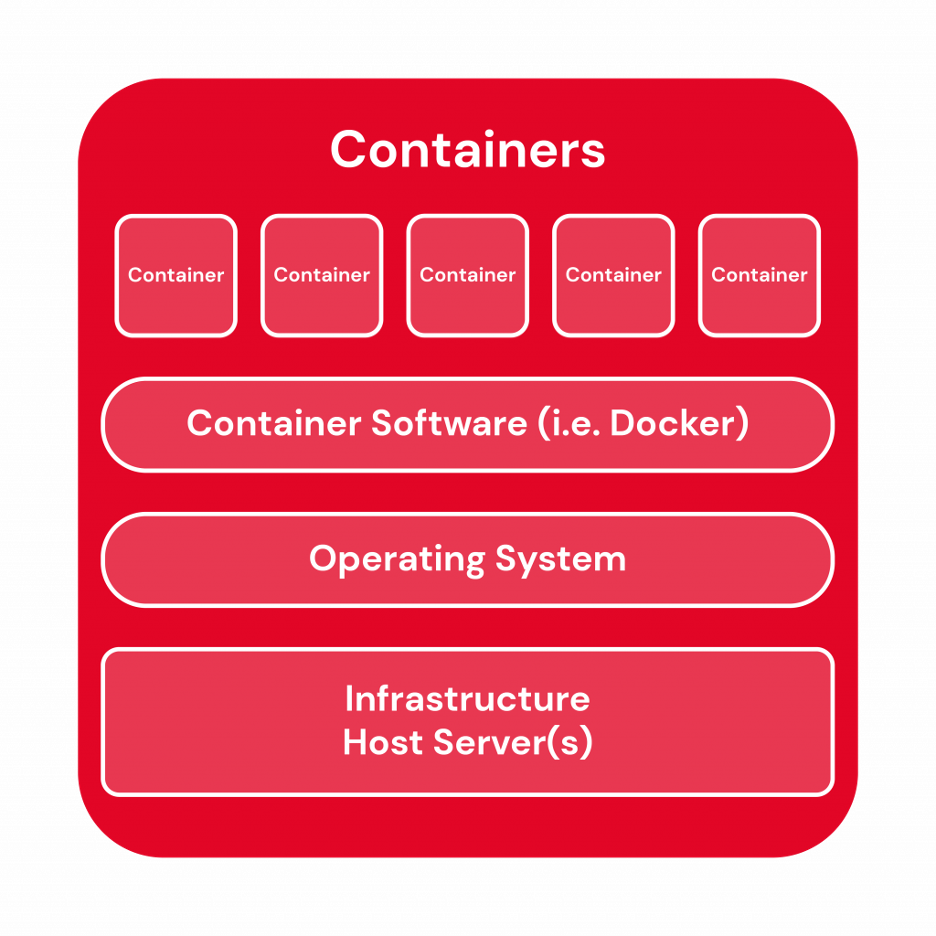

Instead of virtualizing an entire computer as a VM does, containers virtualize just the OS.

Containers sit on top of a physical server and its host OS—typically Linux or Windows. Each container shares the host OS kernel and, usually, the binaries and libraries, too, resulting in more efficient resource utilization. (See below for definitions if you’re not familiar with these terms.) Shared components are read-only.

Why are they more efficient? Sharing OS resources, such as libraries, significantly reduces the need to reproduce the operating system code, so a server can run multiple workloads with a single operating system installation. That makes containers lightweight and portable: they are typically only megabytes in size and take just seconds to start. In practice, this means you can put two to three times as many applications on a single server with containers as you can with VMs. By comparison, VMs take minutes to start and are at least an order of magnitude larger than an equivalent container, measured in gigabytes versus megabytes.
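A quick back-of-the-envelope calculation shows where a density gain like that can come from. The figures below (a 64GB server, 1GB per application, 2GB per operating system copy) are assumptions chosen for illustration, not measurements, and the sketch ignores hypervisor and container runtime overhead.

```python
def max_vms(server_ram_gb, app_ram_gb, guest_os_ram_gb):
    """Each VM carries its own guest OS in addition to the application."""
    return server_ram_gb // (app_ram_gb + guest_os_ram_gb)


def max_containers(server_ram_gb, app_ram_gb, host_os_ram_gb):
    """Containers share one host OS, so its memory cost is paid only once."""
    return (server_ram_gb - host_os_ram_gb) // app_ram_gb


# Assumed figures: 64 GB server, 1 GB per app, 2 GB per OS copy.
vm_count = max_vms(64, 1, 2)
container_count = max_containers(64, 1, 2)
print(vm_count, container_count)  # 21 62
```

With these made-up numbers the server fits roughly three times as many containerized applications, because the OS cost is paid once rather than once per application.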

Container technology has existed for a long time, but the launch of Docker in 2013 made containers essentially the industry standard for application and software development. Containers use technologies like Docker or Kubernetes to create isolated environments for applications. Containers also solve the problem of environment inconsistency, the old “works on my machine” problem often encountered in software development and deployment.

Developers generally write code locally, say on their laptop, then deploy that code on a server. Any differences between those environments (software versions, permissions, database access, etc.) can lead to bugs. With containers, developers can create a portable, packaged unit that contains all of the dependencies needed for that unit to run in any environment, whether it’s local, development, testing, or production. This portability is one of containers’ key advantages.

Containers also offer scalability, as multiple instances of a containerized application can be deployed and managed in parallel, allowing for efficient resource allocation and responsiveness to changing demand.
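As a rough illustration of scaling with demand, the sketch below works out how many identical container instances you would need for a given load. The function name, the 200 requests-per-second capacity, and the load figures are all hypothetical. Real orchestrators use richer signals than a single number.

```python
import math


def replicas_needed(requests_per_second, capacity_per_replica, min_replicas=1):
    """Round up so total capacity covers the load, never dropping below the minimum."""
    return max(min_replicas, math.ceil(requests_per_second / capacity_per_replica))


# Assume each container instance comfortably handles 200 requests per second.
for load in (50, 450, 1200):
    print(load, "req/s ->", replicas_needed(load, 200), "replica(s)")
```

Because each instance is small and starts quickly, adding or removing replicas as the load changes is cheap compared to booting additional VMs.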

Microservices architectures for application development evolved out of this container boom. With containers, applications could be broken down into their smallest component parts or “services” that serve a single purpose, and those services could be developed and deployed independently of each other instead of in one monolithic unit.

For example, let’s say you have an app that allows customers to buy anything in the world. You might have a search bar, a shopping cart, a buy button, etc. Each of those “services” can exist in their own container, so that if, say, the search bar fails due to high load, it doesn’t bring the whole thing down. And that’s how you get your Prime Day deals today.
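Here is a toy sketch of that failure isolation. The three functions are hypothetical stand-ins for services that would each run in their own container. Here they are plain Python functions in one process, so the sketch shows only the idea that one service failing need not take the others down.

```python
def search(query):
    # Simulate the search service buckling under high load.
    raise TimeoutError("search is overloaded")


def cart(items):
    return f"{len(items)} item(s) in cart"


def checkout(items):
    return "order placed"


def call_all(services):
    """Call every service independently so one failure does not stop the rest."""
    results = {}
    for name, call in services.items():
        try:
            results[name] = call()
        except Exception as exc:
            results[name] = f"unavailable ({exc})"
    return results


results = call_all({
    "search": lambda: search("tent"),
    "cart": lambda: cart(["tent"]),
    "checkout": lambda: checkout(["tent"]),
})
for name, outcome in results.items():
    print(f"{name}: {outcome}")
```

The search service reports itself unavailable while the cart and checkout keep working, which is the behavior you want on a busy sales day.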

More Definitions: Binaries, Libraries, and Kernels

Binaries: In general, binaries are non-text files made up of ones and zeros that tell a processor how to execute a program.

Libraries: Libraries are sets of prewritten code that a program can use to do either common or specialized things. They allow developers to avoid rewriting the same code over and over.

Kernels: Kernels are the ringleaders of the OS. They’re the core programming at the center that controls all other parts of the operating system.

Container Tools

Linux Containers (LXC): Commonly known as LXC, these are the original Linux container technology. LXC is a Linux operating system-level virtualization method for running multiple isolated Linux systems on a single host.

Docker: Originally conceived as an initiative to develop LXC containers for individual applications, Docker revolutionized the container landscape by introducing significant enhancements to improve their portability and versatility. Gradually evolving into an independent container runtime environment, Docker emerged as a prominent Linux utility, enabling the seamless creation, transportation, and execution of containers with remarkable efficiency.

Kubernetes: Kubernetes, though not container software in itself, serves as a vital container orchestrator. In the realm of cloud-native architecture and microservices, where applications deploy anywhere from hundreds to many thousands of containers, Kubernetes plays a crucial role in automating the management of these containers. Kubernetes relies on a separate container runtime to actually run containers, and it works with images built by tools like Docker, but it’s such a big name in the container space that it wouldn’t be a container post without mentioning it.

Benefits of Containers

- Reduced IT management resources.

- Faster spin-ups.

- Smaller size means one physical machine can host many containers.

- Reduced and simplified security updates.

- Less code to move when transferring, migrating, or uploading workloads.

What’s the Diff: VMs vs. Containers

The virtual machine versus container question gets at the heart of the debate between traditional IT architecture and contemporary DevOps practices.

VMs have been, and continue to be, tremendously popular and useful, but sadly for them, they now carry the term “monolithic” with them wherever they go like a 25-ton Stonehenge around the neck. Containers, meanwhile, pushed the old gods aside, bedecked in the glittering mantle of “microservices.” Cute.

To offer another quirky tech metaphor, VMs are to containers what glamping is to ultralight backpacking. Both equip you with everything you need to survive in the wilds of virtualization. Both are portable, but containers will get you farther, faster, if that’s your goal. And while VMs bring everything and the kitchen sink, containers leave the toothbrush at home to cut weight. To make a more direct comparison, we’ve consolidated the differences into a handy table:

| VMs | Containers |
|---|---|
| Heavyweight. | Lightweight. |
| Some performance overhead from virtualized hardware. | Near-native performance. |
| Each VM runs in its own OS. | All containers share the host OS. |
| Hardware-level virtualization. | OS virtualization. |
| Startup time in minutes. | Startup time in seconds or less. |
| Allocates required memory. | Requires less memory space. |
| Fully isolated and hence more secure. | Process-level isolation, possibly less secure. |

Uses for VMs vs. Uses for Containers

Both containers and VMs have benefits and drawbacks, and the ultimate decision will depend on your specific needs.

When it comes to selecting the appropriate technology for your workloads, virtual machines (VMs) excel in situations where applications demand complete access to the operating system’s resources and functionality. When you need to run multiple applications on servers, or have a wide variety of operating systems to manage, VMs are your best choice. If you have an existing monolithic application that you don’t plan to or need to refactor into microservices, VMs will continue to serve your use case well.

Containers are a better choice when your biggest priority is maximizing the number of applications or services running on a minimal number of servers and when you need maximum portability. If you are developing a new app and you want to use a microservices architecture for scalability and portability, containers are the way to go. Containers shine when it comes to cloud-native application development based on a microservices architecture.

You can also run containers on a virtual machine, making the question less of an either/or and more of an exercise in understanding which technology makes the most sense for your workloads.

In a nutshell:

- VMs help companies make the most of their infrastructure resources by expanding the number of machines they can squeeze out of a finite amount of hardware and software.

- Containers help companies make the most of their development resources by enabling microservices and DevOps practices.
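If it helps, the rules of thumb from this section can be sketched as a small helper. The function and its flags are hypothetical and only restate the guidance above. Treat the output as a starting point for discussion rather than a verdict.

```python
def suggest_platform(
    needs_different_os=False,    # several different operating systems to manage
    needs_full_os_access=False,  # apps need the OS's full resources and functionality
    legacy_monolith=False,       # existing monolithic app you won't refactor
    wants_microservices=False,   # new app built on a microservices architecture
    wants_max_density=False,     # most apps or services on the fewest servers
    wants_portability=False,     # must run the same way in every environment
):
    """Return a rough suggestion based on the criteria described in this section."""
    vm_signals = sum([needs_different_os, needs_full_os_access, legacy_monolith])
    container_signals = sum([wants_microservices, wants_max_density, wants_portability])
    if vm_signals and container_signals:
        return "both (containers running on VMs)"
    if vm_signals:
        return "VMs"
    if container_signals:
        return "containers"
    return "either"


print(suggest_platform(legacy_monolith=True))                             # VMs
print(suggest_platform(wants_microservices=True, wants_portability=True)) # containers
print(suggest_platform(needs_different_os=True, wants_max_density=True))  # both (containers running on VMs)
```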

Are You Using VMs, Containers, or Both?

If you are using VMs or containers, we’d love to hear from you about what you’re using and how you’re using them. Drop a note in the comments.

VM vs. Containers FAQs

A virtual machine (VM) is a simulated computing environment that emulates an entire computer, including its own operating system, dependencies, and resources. VMs run on a hypervisor, a software layer that sits between the underlying hardware and the virtualized environment. They provide strong isolation but are resource-intensive. Containers, on the other hand, run on top of a physical server and share its host OS kernel and, usually, its libraries, making them lightweight and fast to start. They offer efficient resource utilization but provide weaker isolation. Containers are ideal for deploying lightweight, scalable applications, while VMs are suitable for running multiple applications with different operating systems on a single server.

Containers are more lightweight than virtual machines (VMs). Containers share the host OS kernel and libraries, eliminating the need to run a separate OS instance for each container like you would for a VM. This significantly reduces their overhead and resource requirements compared to VMs, which need to run a complete OS stack. Containers start quickly and consume fewer system resources, making them ideal for deploying and scaling applications efficiently. VMs, on the other hand, require more resources and have a longer startup time due to the need to boot an entire virtualized OS.

Virtual machines (VMs) provide stronger isolation than containers. Because each VM runs its own dedicated OS, it creates a complete virtualized environment. This ensures that applications and processes within one VM are isolated from those in others, providing enhanced security. Containers, while offering some level of isolation, share the host operating system, which can lead to potential security vulnerabilities if not properly managed. Overall, VMs are generally considered to provide better isolation than containers.

Containers are generally faster than virtual machines (VMs). Since containers share the host OS kernel and libraries, they have quick startup times and efficient resource utilization. They can start within seconds and have minimal overhead. In contrast, VMs require booting an entire virtualized OS, resulting in longer startup times and higher resource consumption.

Virtual machines (VMs) work well for scenarios where strong isolation, security, and compatibility with different operating systems are required. They are commonly used for running legacy applications, testing different operating systems or software configurations, and hosting complex software stacks. VMs are beneficial in situations where the application’s dependencies are specific and might conflict with the host system. Containers, on the other hand, use resources more efficiently and are quick and easy to deploy, which makes them ideal for lightweight, scalable applications and microservices architectures.

