Welcome back to AI 101, a series dedicated to breaking down the realities of artificial intelligence (AI). Previously we’ve defined artificial intelligence, deep learning (DL), and machine learning (ML) and dove into the types of processors that make AI possible. Today we’ll talk about one of the biggest limitations of AI adoption—how much it costs. Experts have already flagged that the significant investment necessary for AI can cause antitrust concerns and that AI is driving up costs in data centers.

To that end, we’ll talk about:

- Factors that impact the cost of AI.

- Some real numbers about the cost of AI components.

- The AI tech stack and some of the industry solutions that have been built to serve it.

- And, uncertainty.

Defining AI: Complexity and Cost Implications

While ChatGPT, DALL-E, and the like may be the most buzz-worthy of recent advancements, AI has already been a part of our daily lives for several years now. In addition to generative AI models, examples include virtual assistants like Siri and Google Home, fraud detection algorithms in banks, facial recognition software, URL threat analysis services, and so on.

That brings us to the first challenge when it comes to understanding the cost of AI: The type of AI you’re training—and how complex a problem you want it to solve—has a huge impact on the computing resources needed and the cost, both in the training and in the implementation phases. AI tasks are hungry in all ways: they need a lot of processing power, storage capacity, and specialized hardware. As you scale up or down in the complexity of the task you’re doing, there’s a huge range in the types of tools you need and their costs.

To understand the cost of AI, several other factors come into play as well, including:

- Latency requirements: How fast does the AI need to make decisions? (e.g. that split second before a self-driving car slams on the brakes.)

- Scope: Is the AI solving broad-based or limited questions? (e.g. the best way to organize this library vs. how many times is the word “cat” in this article.)

- Actual human labor: How much oversight does it need? (e.g. does a human identify the cat in cat photos, or does the AI algorithm identify them?)

- Adding data: When, how, and in what quantity will new data need to be ingested to update information over time?

This is by no means an exhaustive list, but it gives you an idea of the considerations that can affect the kind of AI you’re building and, thus, what it might cost.

The Big Three AI Cost Drivers: Hardware, Storage, and Processing Power

In simple terms, you can break down the cost of running an AI to a few main components: hardware, storage, and processing power. That’s a little bit simplistic, and you’ll see some of these lines blur and expand as we get into the details of each category. But, for our purposes today, this is a good place to start to understand how much it costs to ask a bot to create a squirrel holding a cool guitar.

First Things First: Hardware Costs

Running an AI takes specialized processors that can handle complex processing queries. We’re early in the game when it comes to picking a “winner” for specialized processors, but these days, the most common processor is a graphics processing unit (GPU), with Nvidia’s hardware and platform as an industry favorite and front-runner.

The most common “workhorse chip” of AI processing tasks, the Nvidia A100, starts at about $10,000 per chip, and a set of eight of the most advanced processing chips can cost about $300,000. When Elon Musk wanted to invest in his generative AI project, he reportedly bought 10,000 GPUs, which equates to an estimated value in the tens of millions of dollars. He’s gone on record as saying that AI chips can be harder to get than drugs.

Google offers folks the ability to rent its TPUs through the cloud starting at $1.20 per chip hour for on-demand service (less if you commit to a contract). Meanwhile, Intel released a sub-$100 USB stick with a full NPU that can plug into your personal laptop, and folks have created their own models at home with the help of open source developer toolkits. Here’s a guide to using them if you want to get in the game yourself.
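To put the rental figure in perspective, here is a minimal sketch of the arithmetic in Python. The $1.20 per chip hour rate comes from the paragraph above; the job size (eight chips running for 30 days) is a made-up example, and a real bill would also include storage, networking, and any contract discounts.

```python
# On-demand TPU rate quoted above, in US dollars per chip per hour.
TPU_RATE_PER_CHIP_HOUR = 1.20


def rental_cost(chips: int, hours: float, rate: float = TPU_RATE_PER_CHIP_HOUR) -> float:
    """Return the on-demand cost of renting `chips` processors for `hours` hours."""
    return round(chips * hours * rate, 2)


# Hypothetical job: 8 chips running around the clock for 30 days.
example_cost = rental_cost(chips=8, hours=24 * 30)
print(f"Estimated rental cost: ${example_cost:,.2f}")
```

Swapping in a different chip count, duration, or rate gives a quick first-pass comparison against buying hardware outright.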

Clearly, the spectrum for chips is vast—from under $100 to millions—and the landscape for chip producers is changing often, as is the strategy for monetizing those chips—which leads us to our next section.

Using Third Parties: Specialized Problems = Specialized Service Providers

Building AI is a challenge with so many moving parts that, in a business use case, you eventually confront the question of whether it’s more efficient to outsource it. It’s true of storage, and it’s definitely true of AI processing. You can already see one way Google answered that question above: create a network populated by their TPUs, then sell access.

Other companies specialize in broader or narrower parts of the AI creation and processing chain. To name a few diverse examples: Hugging Face, Inflection AI, and Vultr. Their product offerings and resources range widely, from open source communities like Hugging Face, which provide a menu of models, datasets, no-code tools, and (frankly) rad developer experiments, to bare metal server providers like Vultr, which enhance your compute resources. How resources are offered also exists on a spectrum, including proprietary company resources (e.g., Nvidia’s platform), open source communities (looking at you, Hugging Face), or a mix of the two.

This means that, whichever piece of the AI tech stack you’re considering, you have a high degree of flexibility when you’re deciding where and how much you want to customize and where and how to implement an out-of-the-box solution.

Ballparking an estimate of what any of that costs would be so dependent on the particular model you want to build and the third-party solutions you choose that it doesn’t make sense to do so here. But suffice it to say that there’s a pretty narrow field of folks who have the infrastructure capacity, the datasets, and the business need to create their own network. Usually it comes back to some combination of the following: whether you have existing infrastructure to leverage or are building from scratch, whether you’re going to sell the solution to others, what control over research or datasets you have or want, how important privacy is and how you’re incorporating it into your products, how fast you need the model to make decisions, and so on.

Welcome to the Spotlight, Storage

And, hey, with all that, let’s not forget storage. At the most basic level of consideration, AI uses a ton of data. How much? The going rule of thumb is that training an AI model takes at least an order of magnitude more examples than the model has parameters. In other words, you want 10 times more examples than parameters.
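As a quick illustration of that rule of thumb, the sketch below multiplies a parameter count by 10. The function name and the one-million-parameter model are hypothetical, and the 10x factor is the heuristic from the text, not a guarantee of model quality.

```python
def min_training_examples(parameters: int, factor: int = 10) -> int:
    """Rule-of-thumb minimum number of training examples for a model."""
    return parameters * factor


# Hypothetical model with one million parameters.
needed = min_training_examples(1_000_000)
print(f"Suggested minimum examples: {needed:,}")
```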

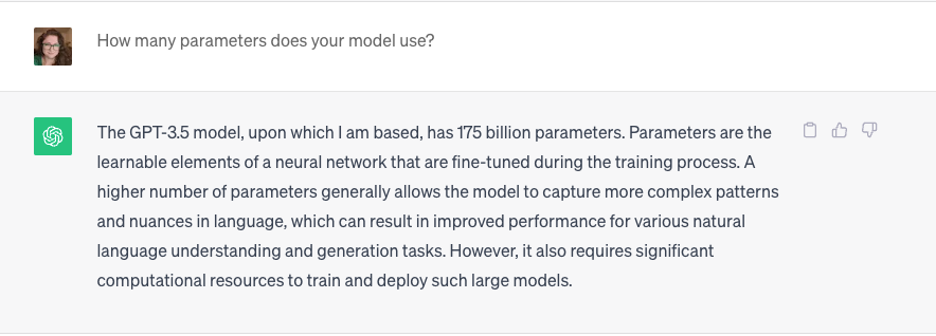

Parameters and Hyperparameters

The easiest way to think of parameters is to think of them as factors that control how an AI makes a decision. Generally, more parameters = more accuracy. And, just like our other AI terms, the term can be somewhat inconsistently applied. Here’s what ChatGPT has to say for itself:

That 10x number is just the amount of data you store for the initial training model—clearly the thing learns and grows, because we’re talking about AI.

Preserving both your initial training algorithm and your datasets can be incredibly useful, too. As we talked about before, the more complex an AI, the higher the likelihood that your model will surprise you. And, as many folks have pointed out, deciding whether to leverage an already-trained model or to build your own doesn’t have to be an either/or—oftentimes the best option is to fine-tune an existing model to your narrower purpose. In both cases, having your original training model stored can help you roll back and identify the changes over time.

The size of the dataset absolutely affects costs and processing times. The best example is that ChatGPT, everyone’s favorite model, has been rocking GPT-3 (or 3.5) instead of GPT-4 on the general public release because GPT-4, which works from a much larger, updated dataset than GPT-3, is too expensive to release to the wider public. It also returns results much more slowly than GPT-3.5, which means that our current love of instantaneous search results and image generation would need an adjustment.

All of that is true because GPT-4 was trained on more information (by volume) and more up-to-date information, and the model was given more parameters to take into account for responses. So, it has to both access more data per query and use more complex reasoning to make decisions. That said, it also reportedly has much better results.

Storage and Cost

What are the real numbers to store, say, a primary copy of an AI dataset? Well, it’s hard to estimate, but we can ballpark that, if you’re training a large AI model, you’re going to have at a minimum tens of gigabytes of data and, at a maximum, petabytes. OpenAI considers the size of its training database proprietary information, and we’ve found sources that cite that number as anywhere from 17GB to 570GB to 45TB of text data.

That’s not actually a ton of data, and, even taking the highest number, it would only cost $225 per month to store that data in Backblaze B2 (45TB * $5/TB/mo), for argument’s sake. But let’s say you’re training an AI on video to, say, make a robot vacuum that can navigate your room or recognize and identify human movement. Your training dataset could easily reach into petabyte scale (for reference, one petabyte would cost $5,000 per month in Backblaze B2). Some research shows that dataset size is trending up over time, though other folks point out that bigger is not always better.
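The storage math above can be sketched in a few lines of Python. The $5/TB/month price and the dataset sizes are the figures quoted in the text; the sketch assumes decimal units (1TB = 1,000GB, 1PB = 1,000TB) and ignores egress, transaction fees, and any extra copies.

```python
PRICE_PER_TB_MONTH = 5.00  # US dollars, the rate used in the text
GB_PER_TB = 1000           # decimal units assumed
TB_PER_PB = 1000


def monthly_storage_cost(size_tb: float, price_per_tb: float = PRICE_PER_TB_MONTH) -> float:
    """Return the monthly cost in dollars of storing `size_tb` terabytes."""
    return size_tb * price_per_tb


# Dataset sizes mentioned in the text, converted to terabytes.
datasets_tb = {
    "17GB text estimate": 17 / GB_PER_TB,
    "570GB text estimate": 570 / GB_PER_TB,
    "45TB text estimate": 45,
    "1PB video dataset": 1 * TB_PER_PB,
}

for name, size_tb in datasets_tb.items():
    print(f"{name}: ${monthly_storage_cost(size_tb):,.2f} per month")
```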

On the other hand, if you’re the guy with the Intel Neural Compute Stick we mentioned above and a Raspberry Pi, you’re talking about the cost of the ~$100 AI processor, ~$50 for the Raspberry Pi, and any incidentals. You can choose to add external hard drives, network attached storage (NAS) devices, or even servers as you scale up.

Storage and Speed

Keep in mind that, in the above example, we’re only considering the cost of storing the primary dataset, and that’s not very accurate when thinking about how you’d be using your dataset. You’d also have to consider temporary storage for when you’re actually training the AI as your primary dataset is transformed by your AI algorithm, and nearly always you’re splitting your primary dataset into discrete parts and feeding those to your AI algorithm in stages—so each of those subsets would also be stored separately. And, in addition to needing a lot of storage, where you physically locate that storage makes a huge difference to how quickly tasks can be accomplished. In many cases, the difference is a matter of seconds, but there are some tasks that just can’t handle that delay—think of tasks like self-driving cars.
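For a sense of what splitting a dataset into discrete parts and feeding it in stages looks like, here is a minimal sketch. The 80/10/10 split, the function names, and the batch size are illustrative assumptions rather than anything prescribed above; real pipelines typically use a framework's data loader and store each subset as its own set of files.

```python
import random


def split_dataset(examples, train=0.8, validation=0.1, seed=0):
    """Shuffle examples and split them into train, validation, and test lists.

    Whatever is left after the train and validation shares goes to test.
    """
    items = list(examples)
    random.Random(seed).shuffle(items)  # seeded so the split is repeatable
    n_train = int(len(items) * train)
    n_val = int(len(items) * validation)
    return (
        items[:n_train],
        items[n_train:n_train + n_val],
        items[n_train + n_val:],
    )


def batches(items, batch_size):
    """Yield successive chunks of `items`, each at most `batch_size` long."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


train_set, val_set, test_set = split_dataset(range(100))
for batch in batches(train_set, batch_size=32):
    pass  # a training step would consume each batch here
```

Each of the three subsets, plus any intermediate output from training, adds to the storage footprint on top of the primary copy.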

For huge data ingest periods such as training, you’re often talking about a compute process that’s assisted by powerful, and often specialized, supercomputers, with repeated passes over the same dataset. Having your data physically close to those supercomputers saves you huge amounts of time, which is pretty incredible when you consider that it breaks down to as little as milliseconds per task.

One way this problem is being solved is via caching, or creating temporary storage on the same chips (or motherboards) as the processor completing the task. Another solution is to keep the whole processing and storage cluster on-premises (at least while training), as you can see in the Microsoft-OpenAI setup or as you’ll often see in universities. And, unsurprisingly, you’ll also see edge computing solutions which endeavor to locate data physically close to the end user.

While there can be benefits to on-premises or co-located storage, having a way to quickly add more storage (and release it if no longer needed), means cloud storage is a powerful tool for a holistic AI storage architecture—and can help control costs.

And, as always, effective backup strategies require at least one off-site storage copy, and the easiest way to achieve that is via cloud storage. So, any way you slice it, you’re likely going to have cloud storage touch some part of your AI tech stack.

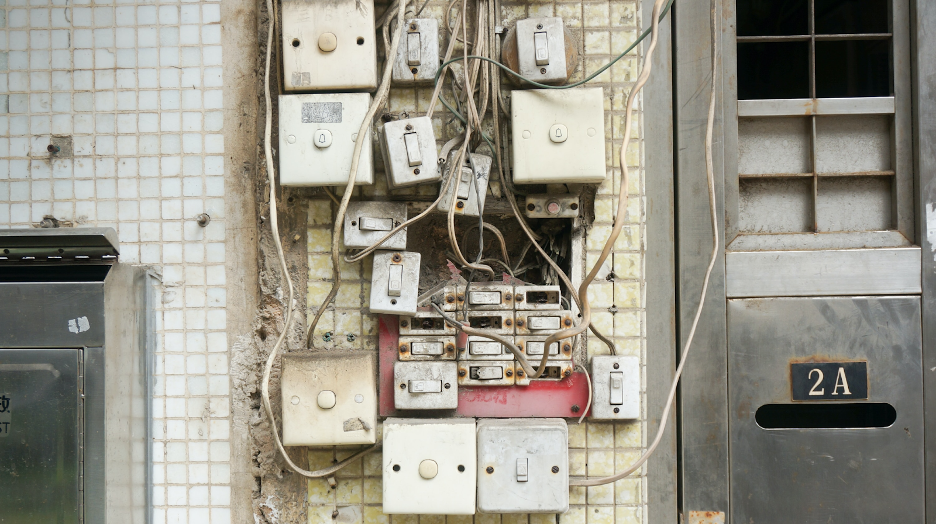

What Hardware, Processing, and Storage Have in Common: You Have to Power Them

Here’s the short version: any time you add complex compute + large amounts of data, you’re talking about a ton of money and a ton of power to keep everything running.

Fortunately for us, other folks have done the work of figuring out how much this all costs. This excellent article from SemiAnalysis goes deep on the total cost of powering searches and running generative AI models. The Washington Post cites Dylan Patel (also of SemiAnalysis) as estimating that a single chat with ChatGPT could cost up to 1,000 times as much as a simple Google search. Those costs include everything we’ve talked about above—the capital expenditures, data storage, and processing.

Consider this: Google spent several years putting off publicizing a frank accounting of their power usage. When they released numbers in 2011, they said that they use enough electricity to power 200,000 homes. And that was in 2011. There are widely varying claims for how much a single search costs, but even the most conservative say 0.03 Wh of energy. There are approximately 8.5 billion Google searches per day. (That’s just an incremental cost, by the way: how much a single search costs in extra resources on top of what the system that powers it costs.)
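Multiplying those two figures together gives a feel for the scale. The sketch below uses only the numbers quoted above (0.03 Wh per search and 8.5 billion searches per day); both are estimates, so treat the result as an order-of-magnitude figure for incremental energy, not a measurement.

```python
WH_PER_SEARCH = 0.03       # conservative per-search estimate from the text
SEARCHES_PER_DAY = 8.5e9   # approximate daily search volume from the text
WH_PER_MWH = 1_000_000


def daily_energy_mwh(wh_per_search: float = WH_PER_SEARCH,
                     searches_per_day: float = SEARCHES_PER_DAY) -> float:
    """Return the estimated incremental energy used by searches per day, in MWh."""
    return wh_per_search * searches_per_day / WH_PER_MWH


print(f"Roughly {daily_energy_mwh():,.0f} MWh per day")
```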

Power is a huge cost in operating data centers, even when you’re only talking about pure storage. One of the biggest single expenses that affects power usage is cooling systems. With high-compute workloads, and particularly with GPUs, the amount of work the processor is doing generates a ton more heat—which means more money in cooling costs, and more power consumed.

So, to Sum Up

When we’re talking about how much an AI costs, it’s not just about any single line item cost. If you decide to build and run your own models on-premises, you’re talking about huge capital expenditure and ongoing costs in data centers with high compute loads. If you want to build and train a model on your own USB stick and personal computer, that’s a different set of cost concerns.

And, if you’re talking about querying a generative AI from the comfort of your own computer, you’re still using a comparatively high amount of power somewhere down the line. We may spread that power cost across our national and international infrastructures, but it’s important to remember that it’s coming from somewhere—and that the bill comes due, somewhere along the way.
